Financial Regulatory Compliance AI 2025

Financial services AI compliance guide: SOC 2, PCI DSS 4.0.1, GDPR, FINRA requirements. Penalties up to €35M. Learn automation strategies reducing compliance costs 65%.

Key Takeaways

- Regulatory Penalties: Non-compliance under the EU AI Act can result in fines of up to €35 million or 7% of global turnover, making proactive AI governance a business-critical priority.

- Workflow Automation: AI-powered RegTech can automate over 60% of compliance workflows, including 100% audit review coverage, reducing total operational compliance costs by up to 65%.

- Risk-Based Framework: The EU AI Act classifies financial credit scoring and life insurance pricing as “High Risk,” requiring strict data governance and human oversight for all deployments.

- Explainability Mandates: GDPR Article 22, widely summarized as the “Right to Explanation,” makes Explainable AI (XAI) a practical necessity for providing transparent rationales for automated decisions that significantly affect customers.

What is Financial Services Regulatory Compliance?

Financial services regulatory compliance refers to the mandatory adherence to laws, regulations, guidelines, and specifications established by governing bodies—such as the SEC, FINRA, FCA, and the EU—to maintain the integrity, safety, and stability of the global financial system. In 2025, compliance is shifting toward “Active Intelligence,” leveraging AI to automate 60%+ of workflows and provide real-time monitoring of transactions and communications to prevent violations of the EU AI Act, GDPR, and PCI DSS 4.0.1.

Quick Facts

- EU AI Act Penalty: Up to €35M or 7% of turnover

- Compliance Cost Reduction with AI: 65%

- Workflow Automation Potential: 60%+

- Audit Review Coverage: 100% (AI) vs 5% (Manual)

- Risk Mitigation Speed: Minutes (AI) vs 24+ Hours (Manual)

Key Questions

What are the biggest regulatory risks for AI in finance?

The biggest risks include “Proxy Discrimination” (unintentional bias in credit scoring), Model Decay (where accuracy drops as economic conditions change), and lack of “Explainability” (violating the customer’s right to an explanation under GDPR Article 22).

Can AI automate the entire financial compliance process?

While AI can automate 60-70% of data-heavy workflows like transaction monitoring and policy-to-code mapping, human Compliance Officers remain essential for high-level risk decisions and ethical oversight.

What is the “Right to Explanation” in AI?

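GDPR Article 22 gives customers the right not to be subject to solely automated decisions with legal or similarly significant effects, and the right to request a human review of such a decision. Read together with the transparency provisions in Articles 13-15, it is commonly summarized as a right to a plain-language explanation of how an automated system (like an AI credit scorer) reached a specific decision.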

Quick Answer

Financial services regulatory compliance in 2025 centers on “Active Intelligence,” where AI monitors 100% of transactions and communications in real-time to ensure adherence to the EU AI Act, GDPR, and PCI DSS 4.0.1. By automating over 60% of manual compliance workflows, institutions can reduce typical operational costs by 65% while fulfilling critical mandates like the “Right to Explanation” and maintaining 24/7 audit readiness across complex global jurisdictions.

Common Questions

What are the new penalties for AI non-compliance?

They are existential.

- EU AI Act: Up to €35M or 7% of global annual turnover (whichever is higher) for prohibited AI practices.

- GDPR: Up to €20M or 4% of global turnover.

- Reputational Damage: Incalculable. Customers lose trust instantly if their data is misused.

What is “RegTech” and why is it growing?

RegTech (Regulatory Technology) is the use of AI to solve compliance challenges at scale. The market is exploding from $15B to $107B because human compliance teams can no longer keep up with the volume of data.

- Manual: Reviewing 5% of communications for insider trading.

- RegTech: Reviewing 100% of communications in real-time.

Does AI replace the Compliance Officer?

No. It gives them “superpowers.” Instead of spending 80% of their time gathering data for spreadsheets, officers spend 80% of their time making high-level risk decisions based on the data the AI gathered for them.

Deep Dive: The Global Regulatory Framework

Understanding the specific laws is the first step to compliance.

1. The EU AI Act (The World’s First Comprehensive AI Law)

Passed in 2024, this sets the standard. It adopts a Risk-Based Approach:

- Unacceptable Risk (Banned):

- Social Scoring (like China’s system).

- Real-time Biometric ID in public spaces (Face recognition by police).

- Subliminal manipulation techniques.

- High Risk (Strictly Regulated):

- Credit Scoring (Determining access to loans).

- Life & Health Insurance pricing.

- Hiring algorithms (Resume scanners).

- Requirements: These systems must have high-quality data governance, documentation, human oversight, and robustness testing.

- Limited Risk (Transparency):

- Chatbots / Deepfakes.

- Requirement: You must tell the user, “You are talking to a machine.”

- Minimal Risk: Spam filters, Games (No restrictions).
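
The tiers above can be sketched as a simple lookup that a model inventory might consult. This is a minimal illustration, not a legal classifier: the use-case labels, the `obligations_for` helper, and the fallback to manual review are all assumptions made for the example.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical use-case labels mapped to the tiers described in the text.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "credit_scoring": RiskTier.HIGH,
    "life_health_insurance_pricing": RiskTier.HIGH,
    "resume_screening": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

# Controls per tier, paraphrased from the list above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["do not deploy"],
    RiskTier.HIGH: [
        "data governance",
        "documentation",
        "human oversight",
        "robustness testing",
    ],
    RiskTier.LIMITED: ["disclose that the user is talking to a machine"],
    RiskTier.MINIMAL: [],
}


def obligations_for(use_case: str) -> list[str]:
    """Return the controls for a use case; unknown cases go to manual review."""
    tier = USE_CASE_TIERS.get(use_case)
    if tier is None:
        return ["manual legal review"]
    return OBLIGATIONS[tier]
```

Sending unknown use cases to manual review, rather than defaulting to “minimal,” keeps the lookup from silently under-classifying a new system.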

2. GDPR (General Data Protection Regulation)

It’s not just about cookies.

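- Article 22: Often called “The Right to Explanation.” It restricts solely automated decisions with legal or similarly significant effects. If an AI denies a loan, the customer can demand a human review, and the transparency provisions (Articles 13-15) entitle them to meaningful information about the logic involved, delivered in plain language.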

- Data Minimization: You can only train on data necessary for the purpose. You cannot hoard data “just in case.”

- The Right to be Forgotten: If a customer leaves, can you delete their data from the AI’s memory? (This is technically hard and requires specific “Machine Unlearning” protocols).

3. US Consumer Protection Laws (Fair Lending)

While the US lacks a federal “AI Act,” existing laws apply strictly to AI.

- ECOA (Equal Credit Opportunity Act) & Fair Housing Act:

- Rule: You cannot discriminate based on race, color, religion, national origin, sex, marital status, or age.

- AI Risk: “Proxy Discrimination.” The AI doesn’t know race, but it uses “Zip Code,” which correlates with race. If the AI disproportionately denies loans in specific zip codes, you risk violating ECOA even without intent.

- Defense: Run “Disparate Impact Analysis” (DIA) testing on a regular schedule, such as quarterly.
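
One common starting point for a Disparate Impact Analysis is the adverse impact ratio: each group's approval rate divided by a reference group's rate. The sketch below assumes synthetic data and a 0.8 cutoff borrowed from the “four-fifths” rule of thumb used in US employment guidance; that cutoff is an assumption for illustration, not an ECOA threshold.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def adverse_impact_ratio(decisions, reference_group):
    """Each group's approval rate divided by the reference group's rate."""
    rates = approval_rates(decisions)
    ref = rates[reference_group]
    return {g: r / ref for g, r in rates.items()}


# Synthetic example: group A approved 80/100, group B approved 50/100.
decisions = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)
ratios = adverse_impact_ratio(decisions, reference_group="A")

# Flag any group whose ratio falls below the assumed 0.8 cutoff.
flagged = [g for g, r in ratios.items() if r < 0.8]
```

A flagged group is a prompt for investigation (for example, checking whether zip code is acting as a proxy), not proof of a violation.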

4. Basel III / IV (Operational Risk)

- Focus: Stability.

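- Supervisory View: AI is increasingly treated like a “Third Party Dependency” (even if built in-house, it depends on data). Model failure is a form of operational risk, and operational risk carries capital requirements, so expect to account for “Model Failure Risk” in your capital planning.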

5. Global Regulatory Heatmap: Beyond the EU & US

The world is fragmenting. You need a multi-jurisdictional strategy.

United Kingdom: The “Consumer Duty”

- Focus: Outcomes.

- Rule: You must prove your AI leads to “Good Outcomes” for retail customers.

- AI Trap: If your algorithm up-sells high-interest loans to vulnerable customers, you are liable.

Singapore: FEAT Principles

- Focus: Ethics.

- FEAT: Fairness, Ethics, Accountability, Transparency.

- Status: Voluntary but expected by MAS (Monetary Authority of Singapore).

Canada: AIDA (Artificial Intelligence and Data Act)

- Focus: Harm reduction.

- Penalty: As proposed in Bill C-27, criminal liability for “reckless” deployment of AI that causes harm.

Australia: AI Ethics Framework

- Focus: Human Rights.

- Rule: AI should not de-skill the workforce or violate privacy.

Takeaway: You cannot build one model for the world. You need “Jurisdictional Wrappers” that adjust the AI’s behavior based on where the user lives.
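
A “Jurisdictional Wrapper” can be as simple as a policy table applied after the model runs. The sketch below is one possible shape under stated assumptions: the `JurisdictionPolicy` fields, the per-region settings, and `wrap_decision` are hypothetical and are not a statement of what each regime legally requires.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JurisdictionPolicy:
    require_explanation: bool
    require_ai_disclosure: bool
    allow_automated_denial: bool


# Illustrative settings only; real values need legal review per jurisdiction.
POLICIES = {
    "EU": JurisdictionPolicy(True, True, False),
    "UK": JurisdictionPolicy(True, True, False),
    "US": JurisdictionPolicy(True, False, True),
}
# Unknown jurisdictions fall back to the most restrictive settings.
STRICTEST = JurisdictionPolicy(True, True, False)


def wrap_decision(jurisdiction, approved, reasons):
    """Post-process one model decision according to the user's jurisdiction."""
    policy = POLICIES.get(jurisdiction, STRICTEST)
    result = {"approved": approved, "needs_human_review": False}
    if not approved and not policy.allow_automated_denial:
        result["needs_human_review"] = True
    if policy.require_explanation:
        result["explanation"] = list(reasons)
    if policy.require_ai_disclosure:
        result["disclosure"] = "This decision was produced with an automated system."
    return result
```

Keeping the rules in data rather than in the model means one model can serve several regions while the wrapper changes per user.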

6. A Day in the Life: Manual vs. AI Compliance

See the difference in speed and stress.

The Old Way (Manual)

- 9:00 AM: Chief Compliance Officer (CCO) reads about a new sanction on “Titanium exports from Country X.”

- 10:00 AM: CCO emails the Trade Finance team: “Do we have exposure?”

- 12:00 PM: Trade team manually searches PDFs of bills of lading.

- 4:00 PM: They find 100 potential matches.

- Day 2: Legal reviews them one by one.

- Result: 24 hours of exposure risk.

The Automated Way (AgenixHub)

- 9:00 AM: AI ingestion engine reads the Sanction Notice.

- 9:01 AM: Knowledge Graph maps “Titanium” to all commodity codes in your trade database.

- 9:05 AM: System flags 3 transactions currently on ships.

- 9:06 AM: Alert sent to CCO dashboard: “3 High-Risk Trades identified. Hold applied automatically.”

- Result: 6 minutes of risk.
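
The automated timeline above boils down to two lookups: sanctioned good to commodity codes, then commodity codes to in-transit shipments. The sketch below uses a plain dictionary as a stand-in for the Knowledge Graph; the commodity codes, shipment fields, and `flag_exposed` function are made up for illustration and do not describe AgenixHub's implementation.

```python
# Hypothetical keyword -> commodity code mapping (stand-in for a knowledge graph).
COMMODITY_CODES = {"titanium": {"C-1001", "C-1002"}}


def flag_exposed(shipments, sanctioned_goods, sanctioned_country):
    """Return IDs of in-transit shipments matching a sanctioned good and origin."""
    codes = set()
    for good in sanctioned_goods:
        codes |= COMMODITY_CODES.get(good.lower(), set())
    return [
        s["id"]
        for s in shipments
        if s["commodity_code"] in codes
        and s["origin"] == sanctioned_country
        and s["status"] == "in_transit"
    ]


# Synthetic trade records.
shipments = [
    {"id": "T-1", "commodity_code": "C-1001", "origin": "X", "status": "delivered"},
    {"id": "T-2", "commodity_code": "C-1002", "origin": "X", "status": "in_transit"},
    {"id": "T-3", "commodity_code": "C-2000", "origin": "X", "status": "in_transit"},
    {"id": "T-4", "commodity_code": "C-1001", "origin": "Y", "status": "in_transit"},
]
holds = flag_exposed(shipments, ["Titanium"], "X")
```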

How AI Automates Compliance

Automated Model Risk Management (MRM)

- Old Way: Manually writing a 50-page Word doc validating a model.

- New Way: The AI platform automatically generates a “Model Card” detailing training data, bias test results, and performance metrics, updated every time the model is retrained.
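
As a rough sketch of what an auto-generated Model Card could contain, the snippet below serializes the three items named above (training data, bias test results, performance metrics) each time a retraining hook fires. The `ModelCard` fields and the `on_retrain` hook are hypothetical names, and the metric values are placeholders.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelCard:
    name: str
    version: str
    training_data: str
    metrics: dict
    bias_tests: dict
    # Timestamp is set when the card is created, i.e. at retraining time.
    generated_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def on_retrain(name, version, training_data, metrics, bias_tests):
    """Build a fresh card after retraining and return it as JSON."""
    card = ModelCard(name, version, training_data, metrics, bias_tests)
    return json.dumps(asdict(card), indent=2, sort_keys=True)


# Placeholder values for illustration.
card_json = on_retrain(
    name="credit_scorer",
    version="1.1",
    training_data="loans_snapshot_v7",
    metrics={"auc": 0.91},
    bias_tests={"adverse_impact_ratio": 0.93},
)
```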

Real-Time Communications Surveillance

- Old Way: Keyword search (e.g., flagging “insider info”).

- New Way: NLP (Natural Language Processing) understands context. It knows that “Let’s take this offline” might be suspicious in one context but innocent in another.

Dynamic KYC/AML Updates

- Old Way: Asking customers to re-upload IDs every 3 years.

- New Way: Continuous monitoring of global sanctions lists (OFAC) and adverse media instantly flags if a clean customer becomes high-risk overnight.
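
Continuous screening usually relies on fuzzy name matching, because list entries and customer records rarely match character for character. The sketch below uses Python's standard `difflib.SequenceMatcher`; the 0.85 threshold and the sample names are assumptions, and production screening typically adds transliteration, aliases, and dates of birth.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lowercase and collapse whitespace so formatting differences don't matter."""
    return " ".join(name.lower().split())


def screen(customers, sanctions_list, threshold=0.85):
    """Return (customer, list_entry, score) for every pair above the threshold."""
    hits = []
    for cust in customers:
        for entry in sanctions_list:
            score = SequenceMatcher(None, normalize(cust), normalize(entry)).ratio()
            if score >= threshold:
                hits.append((cust, entry, round(score, 2)))
    return hits


# Re-run whenever the list changes, not only at onboarding.
hits = screen(["Jon Smith", "Alice Wong"], ["John Smith"])
```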

3-Minute Risk Assessment

Are your AI controls ready for a regulatory audit?

Evaluate your readiness for 2025 financial regulations (SOC 2, GDPR, AI Act).

1. How do you document AI decision-making (Explainability)?

2. Does your system handle 'Right to be Forgotten' (GDPR)?

3. What is your data training environment security?

4. How often do you audit AI models for bias?

5. Is your infrastructure SOC 2 Type II compliant?

Frequently Asked Questions

What is SOC 2 Type II and why do I need it?

It’s the gold standard for trust. Type I proves you designed a secure system. Type II proves you followed your own rules over a 6-12 month period. If you use a cloud AI provider, they MUST be SOC 2 Type II compliant, or you are inheriting their risk.

Can AI explain its decisions (XAI) to regulators?

Yes. We verify all models using SHAP (SHapley Additive exPlanations) values. This mathematical method serves as a “receipt,” showing exactly how much each factor (Income, Age, Debt) contributed to a decision.
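
To make the “receipt” idea concrete, the sketch below computes exact Shapley values by brute force for a toy three-feature scoring rule. It does not use the SHAP library; it implements the underlying definition (average marginal contribution over all feature orderings), which is only feasible for a handful of features. The scoring rule, baseline, and applicant values are invented.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    features = list(instance)
    contrib = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)      # start from the baseline applicant
        prev = model(current)
        for f in order:
            current[f] = instance[f]  # reveal one feature at a time
            new = model(current)
            contrib[f] += new - prev
            prev = new
    return {f: total / len(orderings) for f, total in contrib.items()}


def toy_score(x):
    # Hypothetical linear scoring rule, for illustration only.
    return 0.5 * x["income"] - 0.8 * x["debt"] + 0.1 * x["age"]


baseline = {"income": 50.0, "debt": 20.0, "age": 40.0}
applicant = {"income": 70.0, "debt": 45.0, "age": 30.0}
phi = shapley_values(toy_score, applicant, baseline)
```

The contributions always sum to the difference between the applicant's score and the baseline score, which is what lets them function as an itemized receipt.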

What specific controls are needed for GDPR?

You must respond to Data Subject Access Requests (DSARs) within one month. AI can search petabytes of unstructured data (emails, chat logs) to find every mention of “John Smith” far faster than any manual review could.

Is “Open Source” AI safe for banking?

It carries risk. Using open-source models (like Llama 3) internally is fine, but you must scan them for vulnerabilities (such as model serialization attacks) and run them in a “Private Instance” so your data never leaves your environment.

Technical Deep Dive: Strategies for Automating Compliance

How do you actually build this?

1. Regulatory Change Management (RCM) with NLP

Regulators publish thousands of pages of new rules annually.

- The Problem: A human compliance officer cannot read the Federal Register, the Official Journal of the European Union, and FCA updates every morning.

- The AI Solution: An NLP engine ingests these feeds 24/7.

- The Action: It highlights relevant changes. “Alert: Section 404 of SOX was updated. This impacts your ‘Data Retention Policy’.”

2. Policy-to-Code Mapping (The Holy Grail)

- Concept: Linking a written policy directly to the code that enforces it.

- Tech: Knowledge Graphs.

- Example:

- Policy: “No trading on accounts with >$10k balance without manager approval.”

- Graph: Links this text to function approveTrade().

- Impact: If the policy changes to >$15k, the Graph flags the specific line of code that needs updating.
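
A minimal way to picture the policy-to-code link is a mapping from policy IDs to code locations, plus a fingerprint of the last reviewed policy text. In the sketch below, the `PolicyGraph` class, the policy ID, and the file path are hypothetical; only the `approveTrade` name comes from the example above.

```python
import hashlib


def fingerprint(text):
    """Stable hash of a policy's wording."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


class PolicyGraph:
    def __init__(self):
        self.edges = {}   # policy_id -> set of code locations
        self.hashes = {}  # policy_id -> fingerprint of last reviewed text

    def link(self, policy_id, text, code_ref):
        """Record that code_ref enforces the given policy text."""
        self.edges.setdefault(policy_id, set()).add(code_ref)
        self.hashes[policy_id] = fingerprint(text)

    def impacted_code(self, policy_id, new_text):
        """Return code locations to review if the policy wording changed."""
        if fingerprint(new_text) == self.hashes.get(policy_id):
            return []
        return sorted(self.edges.get(policy_id, ()))


graph = PolicyGraph()
old_policy = "No trading on accounts with >$10k balance without manager approval."
graph.link("POL-7", old_policy, "trading/approvals.py::approveTrade")

new_policy = old_policy.replace("$10k", "$15k")
to_review = graph.impacted_code("POL-7", new_policy)
```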

3. Automated Gap Analysis

- Input: Your current policy documents (PDFs/Word).

- Comparator: The new Regulation (EU AI Act text).

- Output: A “Gap Report” showing exactly where your policy is missing a required clause.
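
The input/comparator/output flow above can be sketched with a clause checklist. This version uses regular expressions as a deliberately crude stand-in for the semantic matching a real tool would need; the clause names and patterns are assumptions derived from the high-risk requirements listed earlier (data governance, documentation, human oversight, robustness testing).

```python
import re

# Hypothetical checklist: required clause -> pattern that suggests it is covered.
REQUIRED_CLAUSES = {
    "data governance": r"data governance",
    "documentation": r"documentation|documented",
    "human oversight": r"human (oversight|review)",
    "robustness testing": r"robustness",
}


def gap_report(policy_text):
    """Return the required clauses that the policy text does not appear to cover."""
    text = policy_text.lower()
    return [
        name
        for name, pattern in REQUIRED_CLAUSES.items()
        if not re.search(pattern, text)
    ]


policy = "Our policy mandates data governance and human review of all denials."
missing = gap_report(policy)
```

A keyword hit only shows that a topic is mentioned, so each “covered” clause still needs a human read before an audit.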

8-Step Implementation Checklist: Being “Audit-Ready”

Phase 1: Governance (Weeks 1-4)

- Establish AI Ethics Committee: Cross-functional team (Risk, Legal, Tech) meeting monthly.

- Model Inventory: Create a centralized registry of EVERY model running in the bank (even the marketing ones).

Phase 2: Visibility (Weeks 5-8)

- Data Lineage: Map exactly where training data comes from. (Can you prove you have consent for every row?)

- Assessment Automation: Deploy the categorization tool (High/Medium/Low risk).

Phase 3: Control (Months 3-6)

- Feature Monitoring: Set up alerts for “Data Drift” (e.g., if the average applicant age drops by 10 years suddenly).

- Bias Testing Suite: Automate weekly fairness tests (Disparate Impact).
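
The Data Drift alert described in this phase can start as a simple mean-shift check on a monitored feature. The sketch below assumes a 10-year limit to mirror the applicant-age example; the `mean_shift_alert` helper and sample ages are illustrative, and fuller monitoring would also compare whole distributions.

```python
from statistics import mean


def mean_shift_alert(reference, current, max_shift):
    """Return (alert, shift): alert is True when the mean moved by max_shift or more."""
    shift = mean(current) - mean(reference)
    return abs(shift) >= max_shift, shift


# Synthetic applicant ages: training-time sample vs. this week's applications.
reference_ages = [45, 50, 40, 45]
current_ages = [30, 35, 38, 33]
alert, shift = mean_shift_alert(reference_ages, current_ages, max_shift=10)
```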

Phase 4: Response (Months 6+)

- Incident Response Plan: “What do we do if the AI hallucinates and promises a 10% interest rate?” (Kill switch protocol).

- External Audit: Hire a third party to try and break your governance.

The ROI of Compliance (Beyond Avoiding Fines)

Compliance is expensive. But efficient compliance saves money.

- Reduced Audit Fees: Digital logs save auditors hundreds of hours ($400/hr savings).

- Faster Product Launches: When compliance is automated, “Legal Review” takes days, not months. You get to market faster.

- Trust Premium: Banks with A+ security ratings attract more deposits.

Common Audit Failures: Where Banks Get Caught

The regulator will look for these weak spots.

Failure 1: “The Spreadsheet Trap”

- Scenario: You track model approvals in Excel.

- The Fine: “Lack of immutable audit trail.” Spreadsheets can be edited.

- The Fix: Use a Git-based Model Registry.
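
What makes a Git-based registry harder to edit quietly than a spreadsheet is hash chaining: each commit references the hash of its parent. The sketch below reproduces that idea in a few lines rather than using Git itself; the entry format and function names are assumptions. The result is tamper-evident, not literally immutable.

```python
import hashlib
import json

GENESIS = "0" * 64


def _digest(event, prev_hash):
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event whose hash covers both the event and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)})


def verify(log):
    """Recompute every hash in order; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"model": "credit_scorer", "action": "approved"})
append_entry(audit_log, {"model": "credit_scorer", "action": "deployed"})
```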

Failure 2: “Shadow AI”

- Scenario: Marketing buys an AI tool to write emails without telling IT.

- The Fine: “Unsupervised data leakage.”

- The Fix: Network scanning to detect unauthorized API calls to OpenAI/Anthropic.
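
A first pass at Shadow AI detection can run over existing proxy or DNS logs. The sketch below assumes a simplified two-column log format (`<source_host> <destination_host>`) and a hypothetical allow-list of approved gateways; real logs will need their own parser, and the host set would need to cover more providers.

```python
# Public API hostnames for the two providers named above.
AI_API_HOSTS = {"api.openai.com", "api.anthropic.com"}


def find_shadow_ai(proxy_log_lines, approved_sources):
    """Return (source, destination) pairs for AI API calls from unapproved hosts."""
    findings = []
    for line in proxy_log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip lines that don't match the assumed format
        source, dest = parts
        if dest in AI_API_HOSTS and source not in approved_sources:
            findings.append((source, dest))
    return findings


# Synthetic log lines.
log_lines = [
    "mkt-laptop-7 api.openai.com",
    "ml-gateway api.anthropic.com",
    "mkt-laptop-7 example.com",
]
findings = find_shadow_ai(log_lines, approved_sources={"ml-gateway"})
```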

Failure 3: “Drift Neglect”

- Scenario: The model was valid in 2022, but hasn’t been re-validated.

- The Fine: “Model Decay.”

- The Fix: Automated re-validation triggers every 90 days.
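
The 90-day trigger is straightforward date arithmetic over the model inventory. In this sketch the registry is a plain dictionary of last-validation dates; the model names, dates, and `overdue_models` helper are placeholders.

```python
from datetime import date, timedelta

REVALIDATION_INTERVAL = timedelta(days=90)


def overdue_models(last_validated, today):
    """Return names of models whose last validation is 90 or more days old."""
    return sorted(
        name
        for name, validated_on in last_validated.items()
        if today - validated_on >= REVALIDATION_INTERVAL
    )


# Placeholder registry: model name -> date of last validation.
registry = {
    "credit_scorer": date(2025, 1, 1),
    "fraud_detector": date(2025, 5, 1),
}
due = overdue_models(registry, today=date(2025, 6, 1))
```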

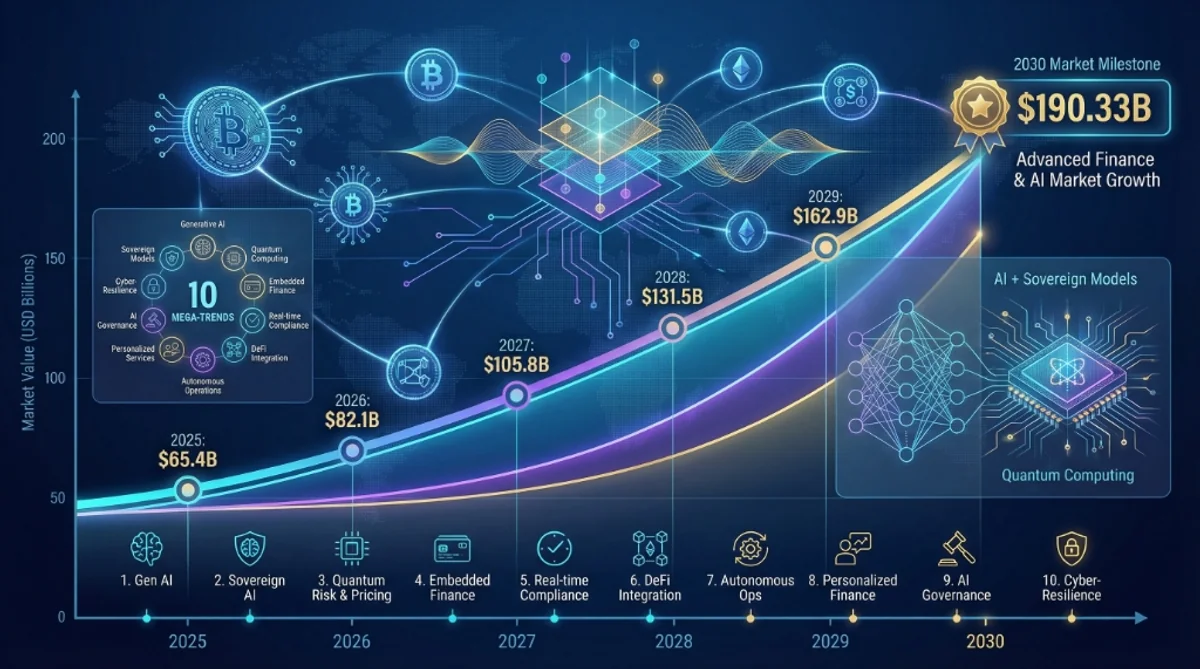

The Future of Regulation: 2030 Outlook

What comes next?

- Machine-Readable Regulation: Laws will be published as code (Python/JSON), not PDFs.

- Real-Time Auditing: Regulators will have API access to your bank. They won’t ask for a report; they will pull the data themselves.

- Liability for Agents: If an Autonomous AI Agent makes a trade that loses money, who is liable? The coder? The user? The bank? This legal framework is being written now.

Glossary of RegTech Terms

- Model Risk Management (MRM): The framework for managing the lifecycle of AI models (Development, Validation, Deployment, Retirement).

- Explainability (XAI): The ability to describe why a model reached a decision.

- Disparate Impact: Unintentional discrimination where a neutral rule impacts a protected group (e.g., “No loans for people who rent” might hurt minorities more).

- Sandboxing: A safe environment provided by regulators (like the FCA) where you can test innovative AI without fear of fines.

- Human-in-the-Loop (HITL): A safeguard where a human review is required for low-confidence decisions.
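
The Human-in-the-Loop entry can be illustrated with a confidence gate. The 0.9 threshold and the `route` function are assumptions for the example; the right threshold depends on the decision's impact and the model's calibration.

```python
def route(decision, confidence, threshold=0.9):
    """Send low-confidence decisions to a human; pass the rest through."""
    if confidence < threshold:
        # The model's output is kept only as a suggestion for the reviewer.
        return {"decision": None, "route": "human_review", "model_suggestion": decision}
    return {"decision": decision, "route": "automated"}
```

For example, `route("deny", 0.72)` is held for review, while `route("approve", 0.97)` is released automatically.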

Buying RegTech: A Due Diligence Checklist

Before you sign a contract with an AI vendor, ask these hard questions.

1. Data Residency

- Question: “Does my customer data ever leave my country?”

- Red Flag: “We process data in a global cloud.” (May violate GDPR transfer rules and certain banking secrecy laws).

2. Liability

- Question: “If your AI makes a mistake that leads to a fine, do you indemnify us?”

- Red Flag: “Our liability is capped at the cost of the subscription.”

3. Explainability

- Question: “Can you show me the decision tree for a specific alert?”

- Red Flag: “Our proprietary algorithm is a trade secret.”

4. Continuity

- Question: “If your company goes bankrupt, do we get the source code (Software Escrow)?”

- Red Flag: “No.”

Summary

In summary, financial services regulatory compliance in 2025 is a real-time requirement that can no longer be managed through manual checklists and periodic audits. By deploying AI-driven monitoring and “Compliance by Design” frameworks, financial institutions can avoid astronomical penalties and turn regulatory adherence into a foundation for customer trust and operational efficiency.

Turn compliance into a competitive advantage: Contact AgenixHub for a confidential Compliance Architecture review.

Don’t wait for the audit. Automate your financial compliance with AgenixHub today.