Financial AI Implementation Guide 2025

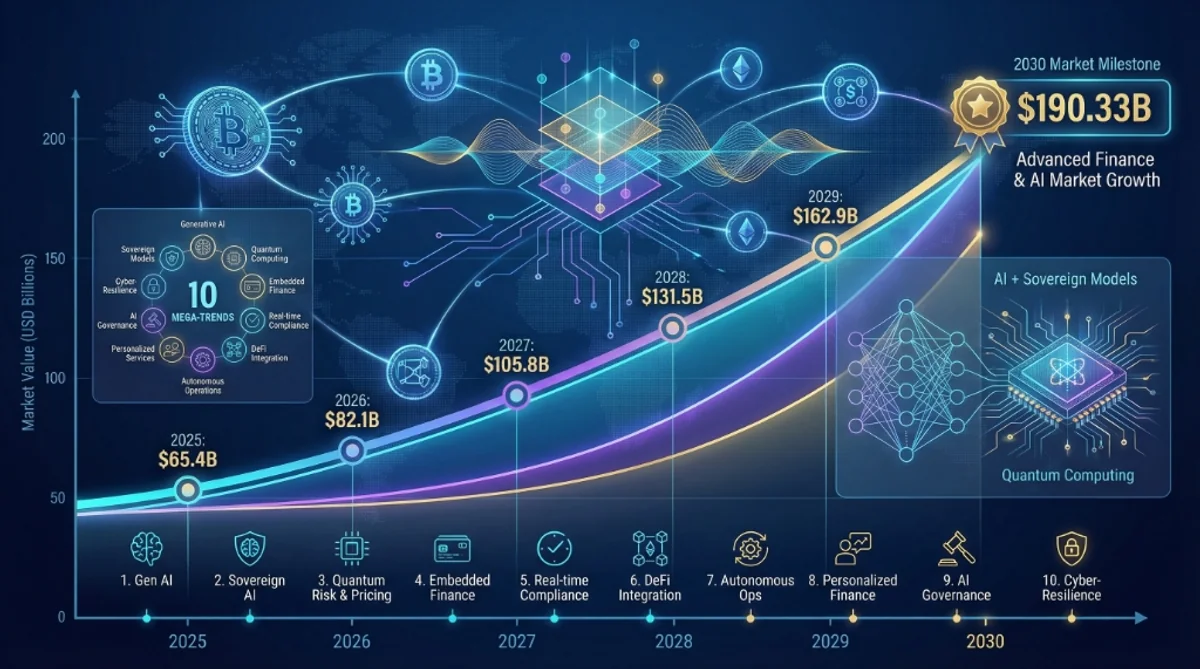

Financial services AI implementation guide: $190.33B market by 2030, 210-600% ROI, compliance requirements (SOC 2, FINRA, GDPR), and proven deployment strategies.


Key Takeaways

- Regulatory Prioritization: Compliance is a foundational requirement, with SOC 2, PCI DSS 4.0, and GDPR guiding secure on-premises or private cloud AI deployments.

- High-Impact Use Cases: Fraud detection and automated risk assessment offer the fastest ROI (210-600%), typically paying back the initial investment in 3.5 to 9 months.

- Deployment Efficiency: Modern modular platforms enable a shift from multi-year consulting cycles to production-ready pilots within 2-4 weeks at a significantly lower entry cost.

- Privacy-Enhancing Tech: Technologies such as Federated Learning and Customer-Managed Encryption Keys (CMEK) are essential for isolating sensitive financial data from public models.

What is Financial Services AI Implementation?

Financial services AI implementation refers to the strategic deployment of artificial intelligence and machine learning models within banking, fintech, and insurance sectors to automate complex processes including fraud detection, credit risk assessment, and regulatory compliance. It describes how organizations build secure multi-layered architectures using private cloud or on-premises environments to ensure strict adherence to financial regulations such as SOC 2, PCI DSS, and GDPR while achieving measurable operational ROI through modular, compliant-by-design AI systems.

Quick Answer

AI implementation in financial services enables banks and fintechs to automate fraud detection, credit risk, and compliance within 2-4 weeks, delivering 210-600% ROI.

By utilizing secure on-premises architectures and privacy-enhancing technologies, institutions can deploy production-ready systems that strictly adhere to SOC 2 and GDPR while ensuring customer financial data remains isolated from public training sets.

Quick Facts

- Implementation Cost: $25K-$100K (Pilot)

- Typical ROI: 210-600%

- Payback Period: 3.5-9 months

- Compliance Standards: SOC 2, PCI DSS 4.0, GDPR

- Deployment Speed: 2-4 weeks (AgenixHub)

Key Questions

How much does AI implementation cost for financial institutions?

For a production-ready pilot, initial implementation typically costs between $25,000 and $100,000, significantly lower than traditional enterprise consulting due to modular AI platforms.

Is AI implementation in banking secure enough for customer data?

Yes, provided it uses Privacy-Enhancing Technologies (PETs) like Federated Learning and Customer-Managed Encryption Keys (CMEK), ensuring that sensitive data remains isolated and never trains public models.

What is the ROI timeline for financial AI?

Most financial institutions achieve a full return on investment within 3.5 to 9 months by prioritizing high-impact use cases like fraud detection and automated document processing.

Common Questions

How much does AI implementation cost for financial institutions?


Between $25,000 and $100,000 for an initial production-ready pilot.

Unlike the multi-million dollar “Big Four” consulting engagements of the past decade, modern AI implementation is modular.

- Pilot Phase: $25K-$50K (Proof of value, 4-6 weeks).

- Full Deployment: $100K+ (Enterprise integration, scaling).

- Ongoing: SaaS subscription or maintenance fee (often less than 20% of the value generated).

What about data security and compliance?

Compliance is a feature, not a bug.

AgenixHub and similar enterprise platforms are built “Compliance-First.”

- SOC 2 Type II: Ensuring rigorous controls over data privacy.

- PCI DSS 4.0.1: Latest standards for cardholder data.

In 2025, the regulatory landscape is active. You cannot deploy “black boxes.”

- GDPR Article 22: Often described as the “Right to Explanation.” Data subjects have the right not to be subject to a decision based solely on automated processing when it produces legal or similarly significant effects. Your AI must provide a “Human in the Loop” option.

- EU AI Act: Classifies credit scoring, and risk assessment and pricing for life and health insurance, as “High Risk,” requiring strict conformity assessments and data governance.

- Private AI: Models can run On-Premises or in a Private Cloud (VPC), ensuring customer financial data never trains public models like ChatGPT.

Technical Architecture: The Financial AI Stack

You don’t just “install AI.” You build a stack.

1. The Data Layer (The Vault)

- Vector Databases (e.g., Pinecone, Milvus):

- Use Case: Storing millions of regulatory documents.

- Why: Enables “Retrieval Augmented Generation” (RAG). When a compliance officer asks, “Is this compliant with Basel III?”, the AI retrieves the exact text before answering, which grounds the answer in the source document and sharply reduces hallucinations.

- Knowledge Graphs:

- Use Case: Anti-Money Laundering (AML).

- Why: Maps relationships. “User A sent money to User B, who shares an IP address with Sanctioned Entity C.” Relational databases miss this; Graphs catch it instantly.

- Legacy Core wrappers:

- Use Case: Talking to Fiserv/Jack Henry/Mainframe.

- Tech: API Gateways that translate modern REST/gRPC calls into the formats legacy cores expect, such as COBOL record layouts or ISO 8583 messages.
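To make the Knowledge Graph example concrete, here is a minimal sketch of the AML lookup described above, using a breadth-first search over a small in-memory graph. The node names, the `EDGES` list, and the `max_hops` limit are all hypothetical; a production system would likely run this kind of query inside a dedicated graph database rather than in application code.

```python
from collections import deque

# Hypothetical relationships: (node, relation, node). All names are illustrative.
EDGES = [
    ("user_a", "sent_money_to", "user_b"),
    ("user_b", "uses_ip", "ip_203.0.113.7"),
    ("sanctioned_c", "uses_ip", "ip_203.0.113.7"),
    ("user_d", "sent_money_to", "user_e"),
]
SANCTIONED = {"sanctioned_c"}


def build_graph(edges):
    """Build an undirected adjacency map so links can be followed both ways."""
    graph = {}
    for src, _relation, dst in edges:
        graph.setdefault(src, set()).add(dst)
        graph.setdefault(dst, set()).add(src)
    return graph


def path_to_sanctioned(graph, start, sanctioned, max_hops=3):
    """Breadth-first search: shortest path to a sanctioned node, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in sanctioned and node != start:
            return path
        if len(path) - 1 >= max_hops:
            continue  # do not look further than max_hops links away
        for neighbor in sorted(graph.get(node, ())):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


graph = build_graph(EDGES)
print(path_to_sanctioned(graph, "user_a", SANCTIONED))
print(path_to_sanctioned(graph, "user_d", SANCTIONED))
```

The first call surfaces the chain from User A through User B and the shared IP address to the sanctioned entity; the second finds no connection within three hops.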

2. The Privacy Layer (PETs)

Privacy-Enhancing Technologies (PETs) are non-negotiable in finance.

- Federated Learning:

- Scenario: a global bank wants to train a fraud model on data from London, New York, and Singapore.

- Problem: GDPR and data residency laws prevent moving data across borders.

- Solution: The model travels to the data. It trains locally in London, gets smarter, and sends only the weights (math) back to HQ, not the customer PII.

- Homomorphic Encryption:

- Scenario: Analyzing data while it is still encrypted.

- Magic: You can perform calculations on the encrypted data (Ciphertext) and decrypt the result, never seeing the raw data.
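The Federated Learning flow above can be sketched with weighted federated averaging. This is a toy illustration, not a production recipe: the three regional datasets, the tiny linear model, and the learning rate are all assumptions chosen so the example runs with the standard library alone.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """Gradient descent on one site's private rows; only weights leave the site."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum((w * x + b - y) * x for x, y in data) / n
        grad_b = sum((w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(site_weights, site_sizes):
    """Average the site weights, weighted by each site's number of records."""
    total = sum(site_sizes)
    w = sum(sw[0] * n for sw, n in zip(site_weights, site_sizes)) / total
    b = sum(sw[1] * n for sw, n in zip(site_weights, site_sizes)) / total
    return (w, b)


# Hypothetical per-region (feature, label) rows; they never leave their region.
SITES = {
    "london": [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    "new_york": [(1.0, 3.0), (3.0, 7.0)],
    "singapore": [(0.5, 2.0), (1.5, 4.0), (2.5, 6.0), (3.5, 8.0)],
}

global_weights = (0.0, 0.0)
for _round in range(50):
    # Each site starts from the shared model and trains on local data only.
    updates = [local_update(global_weights, rows) for rows in SITES.values()]
    sizes = [len(rows) for rows in SITES.values()]
    # HQ sees weights and record counts, never the underlying rows.
    global_weights = federated_average(updates, sizes)

print(global_weights)
```

In this sketch every region's rows follow the same underlying pattern (y = 2x + 1), so the shared model converges toward a slope near 2 and an intercept near 1 without any row crossing a border.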

3. The Governance Layer (Model Risk Management)

- Drift Detection:

- Trigger: “The economic environment changed (e.g., Inflation up).”

- Action: The monitoring system alerts that the model’s training data (collected during a low-inflation period) is no longer representative.

- Bias Auditing:

- Requirement: Fair Lending Laws.

- Action: Automated tests run every night to ensure the model doesn’t reject loans based on zip code (proxy for race) or gender.
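One common way to implement the Drift Detection check is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks in live traffic. The sketch below is a simplified version under stated assumptions: equal-width buckets, a hypothetical feature sample, and the 0.25 alert level, which is a widely used rule of thumb rather than a regulatory threshold.

```python
import math


def population_stability_index(expected, actual, bins=5):
    """PSI between a training-time sample and a live sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training period versus a shifted live period.
training = [1.0, 1.5, 2.0, 2.5, 3.0] * 20
live_shifted = [v + 2.0 for v in training]

ALERT_LEVEL = 0.25  # rule-of-thumb cutoff, an assumption for this sketch
psi = population_stability_index(training, live_shifted)
print(f"PSI={psi:.2f}, retrain_alert={psi > ALERT_LEVEL}")
```

A PSI of zero means the live distribution matches training; larger values mean the model is scoring a population it was not trained on.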

What is the ROI timeline?

Fast. Typically 3.5 to 9 months.

- Fraud Detection: Immediate impact. Stopping one $50K fraudulent wire transfer pays for the system.

- Customer Support: 3-6 months. Deflecting 30% of calls allows staff to focus on high-value sales.

- Document Processing: 6-9 months. Automating mortgage/loan origination review saves thousands of man-hours.

Strategic Roadmap: From Pilot to Profit

Phase 1: The “Safe” Pilot (Weeks 1-4)

Don’t start with your core trading engine. Start with a high-friction, low-risk process.

- Example: Internal document search (Help employees find policy answers faster).

- Goal: Prove the infrastructure is secure without touching critical customer transactions.

Phase 2: Compliance Review (Week 5)

Engage your Risk and Compliance teams early.

- Deliverable: Model Risk Management (MRM) report documenting “Explainability” (Why did the AI make this decision?).

- Requirement: Ensure alignment with FINRA and Consumer Financial Protection Bureau (CFPB) guidelines on non-discrimination.

Phase 3: Core Integration (Months 2-4)

Connect the AI to your transactional systems via secure API.

- Use Case: Real-time fraud scoring on card transactions.

- Verification: Run in “Shadow Mode” (AI flags fraud but doesn’t block it) to tune accuracy and minimize false positives.

Phase 4: Scaling (Month 6+)

Roll out across departments.

- Expansion: Use the same infrastructure for KYC (Know Your Customer) automation and personalized marketing.

- Center of Excellence (CoE): Establish a dedicated AI team that sets standards for the rest of the bank.

The Cost of Inaction: Why You Can’t Wait

Some executives ask: “Why not wait until the technology matures?” Answer: Because your fraud losses won’t wait.

| Risk Factor | Financial Impact |
|---|---|
| Regulatory Fines | $15 Million (average cost of non-compliance for mid-sized institutions) |
| Fraud Losses | 1-2% of revenue lost to sophisticated AI-driven attacks |
| Customer Churn | 30% of Millennials will switch banks for better digital features |
| Operational Debt | $1.8M/year spent on manual data entry that AI could automate |

The Asymmetric Risk: The risk of doing nothing (lost market relevance) is now higher than the risk of doing something (implementation friction).

Vendor Selection: Build vs. Buy?

Should you hire 50 data scientists or buy a platform?

Option A: Build (In-House)

- Pros: Total control. IP ownership.

- Cons:

- Talent War: Hiring a Lead AI Engineer costs $250k - $400k/year.

- Time: 18-24 months to first value.

- Maintenance: You must patch, update, and secure it forever.

Option B: Buy (SaaS / Platform)

- Pros:

- Speed: Deploy in 4 weeks.

- Benchmarks: Benefit from models trained on industry-wide patterns (e.g., universal fraud signals).

- Compliance: Vendor handles the SOC 2 audits.

- Cons: Less customization (unless you choose a hybrid platform like AgenixHub).

Recommendation: Buy the “Plumbing” (Infrastructure, Compliance, Connectors) and Build the “IP” (The specific credit model that makes your bank unique).

Detailed Implementation Checklist (Step-by-Step)

Month 1: Discovery & Data

- Data Audit: Identify where your data lives (Data Lake? Mainframe? Excel?).

- PII Mapping: Tag all Personally Identifiable Information (Names, SSNs) for redaction.

- Define Success: “Reduce Fraud False Positives by 20%.” (Be specific).
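As a starting point for the PII Mapping step, the sketch below tags and redacts two pattern-based identifiers with regular expressions. The patterns and the `redact` helper are hypothetical and deliberately narrow: free-text items such as names cannot be caught reliably this way and usually need a dedicated entity-recognition step plus human review.

```python
import re

# Hypothetical patterns; real PII mapping needs far broader coverage.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact(text):
    """Replace each match with a tag; return the redacted text and tag counts."""
    counts = {}
    for tag, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{tag}]", text)
        counts[tag] = n
    return text, counts


sample = "Applicant 123-45-6789 wrote from jane.doe@example.com about a wire."
redacted_text, tag_counts = redact(sample)
print(redacted_text)
print(tag_counts)
```

The per-tag counts double as a rough data-audit signal: a source system that produces many hits is one to prioritize for redaction before any model sees it.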

Month 2: Infrastructure & Security

- Establish Sandbox: Create a secure VPC (Virtual Private Cloud) isolated from the internet.

- Pentesting: Hire ethical hackers to attack the AI model (Adversarial Robustness).

- Connect APIs: Hook up the Inference Engine to the Transaction Switch.

Month 3: Training & Validation

- Backtesting: Run the model on last year’s data. Did it catch the fraud you missed?

- Bias Testing: Run the “Disparate Impact Analysis” to ensure fair lending.

- Model Card Creation: Document the model’s lineage, limitations, and intended use for the regulator.
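A simple form of the Disparate Impact Analysis compares approval rates between groups. The sketch below uses made-up audit data and the “four-fifths” ratio, a commonly cited screening heuristic; it is an assumption here, not a legal test, and your compliance team decides which metrics and thresholds actually apply.

```python
def approval_rate(decisions):
    """Share of applications approved; decisions is a list of booleans."""
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(protected, reference):
    """Protected group's approval rate divided by the reference group's rate."""
    return approval_rate(protected) / approval_rate(reference)


# Hypothetical audit sample: True means the loan was approved.
reference_group = [True] * 80 + [False] * 20  # 80% approved
protected_group = [True] * 56 + [False] * 44  # 56% approved

FOUR_FIFTHS = 0.8  # screening heuristic, an assumption for this sketch
ratio = disparate_impact_ratio(protected_group, reference_group)
print(f"ratio={ratio:.2f}, flag_for_review={ratio < FOUR_FIFTHS}")
```

A ratio below the chosen threshold does not prove discrimination; it flags the model for a closer look at which features are driving the gap.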

Month 4: Shadow Deployment

- Shadow Mode: The AI scores live traffic but takes no action. It just logs “I would have blocked this.”

- Analyst Review: Your fraud team reviews the logs. “The AI was right 98% of the time.”

- Calibration: Tweak the thresholds (e.g., “Block if Risk Score > 92”).
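The Calibration step can be sketched as a threshold sweep over the shadow-mode log. Everything here is illustrative: the log entries, the 0-100 score scale, and the precision floor are assumptions, and a real calibration would use far more data and weigh the cost of a missed fraud against the cost of a blocked customer.

```python
# Hypothetical shadow-mode log: (risk_score 0-100, analyst_confirmed_fraud).
SHADOW_LOG = [
    (99, True), (97, True), (95, True), (93, False), (91, True),
    (88, False), (85, True), (70, False), (40, False), (12, False),
]


def evaluate(log, threshold):
    """Precision and recall if every score above the threshold were blocked."""
    flagged = [is_fraud for score, is_fraud in log if score > threshold]
    true_pos = sum(flagged)
    total_fraud = sum(is_fraud for _score, is_fraud in log)
    precision = true_pos / len(flagged) if flagged else 1.0
    recall = true_pos / total_fraud if total_fraud else 0.0
    return precision, recall


def lowest_threshold_meeting(log, min_precision, candidates=range(0, 100)):
    """Lowest threshold (most fraud caught) that still meets the precision floor."""
    for threshold in candidates:
        precision, _recall = evaluate(log, threshold)
        if precision >= min_precision:
            return threshold
    return None


chosen = lowest_threshold_meeting(SHADOW_LOG, min_precision=0.8)
print(chosen, evaluate(SHADOW_LOG, chosen))
```

Because recall can only fall as the threshold rises, picking the lowest threshold that satisfies the precision floor keeps as much confirmed fraud flagged as that floor allows.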

Month 5: Go-Live

- Phased Rollout: Enable for 5% of traffic (e.g., Transactions < $100).

- Monitoring: Watch the dashboard for latency or errors.

- Full Switch: Enable for 100% of traffic.
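One way to implement the Phased Rollout is deterministic hash bucketing, so the same account always lands on the same side of the switch. This sketch assumes the rollout is keyed on a hypothetical account ID and, as one reading of the example above, combines the 5% traffic limit with a $100 transaction cap; both function names and parameters are illustrative.

```python
import hashlib


def in_rollout(account_id, percent):
    """Place an account in one of 100 stable buckets using a SHA-256 hash."""
    digest = hashlib.sha256(account_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100 < percent


def ai_decides(account_id, amount_usd, percent=5, max_amount_usd=100):
    """Route to the AI only for small transactions from accounts in the rollout."""
    return amount_usd < max_amount_usd and in_rollout(account_id, percent)


# Roughly 5% of a hypothetical account population ends up in the rollout.
enrolled = sum(in_rollout(f"acct-{i}", 5) for i in range(10_000))
print(enrolled / 10_000)
```

Raising `percent` step by step widens the rollout without reshuffling accounts that were already enabled, which keeps before-and-after comparisons clean.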

Calculate Your Potential ROI

Financial AI pays for itself through three levers: Risk Reduction, Operational Efficiency, and Customer Retention.
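A rough version of this estimate can be computed by hand. The sketch below sums savings across the three levers and derives a first-year ROI and payback period. All dollar figures are hypothetical placeholders, not benchmarks, and the formula is one simple convention among several.

```python
def roi_estimate(upfront_cost, annual_run_cost, annual_savings):
    """First-year ROI (%) and payback period (months) from annual figures."""
    net_first_year = annual_savings - annual_run_cost - upfront_cost
    roi_percent = 100.0 * net_first_year / (upfront_cost + annual_run_cost)
    monthly_net = (annual_savings - annual_run_cost) / 12
    # Payback is undefined if the system never covers its own running cost.
    payback_months = upfront_cost / monthly_net if monthly_net > 0 else None
    return roi_percent, payback_months


# Hypothetical inputs: replace with your own estimates for each lever.
savings = {
    "risk_reduction": 180_000,
    "operational_efficiency": 90_000,
    "customer_retention": 30_000,
}
roi, payback = roi_estimate(
    upfront_cost=75_000,
    annual_run_cost=25_000,
    annual_savings=sum(savings.values()),
)
print(f"ROI: {roi:.0f}%  payback: {payback:.1f} months")
```

Running the numbers with conservative savings first is a useful sanity check before committing to a pilot budget.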

Deep Dive: Key Use Cases

1. Fraud Detection & AML

The Killer App. AI analyzes thousands of variables (location, device, time, behavior) in milliseconds to spot anomalies.

- Impact: 97%+ accuracy vs 60-70% for legacy rules-based systems.

- Savings: 50-90% reduction in false positives (saving analyst time).

2. Automated KYC/Onboarding

The Friction Killer. AI uses OCR (Optical Character Recognition) to read passports and facial recognition to verify identity.

- Speed: Verify a user in seconds, not days.

- Result: 50% increase in conversion rates (fewer customers drop out during sign-up).

3. Customer Experience (The “Super-Agent”)

The Growth Driver. AI analyzes transaction history to offer hyper-personalized advice.

- Example: “You spent $200 on fees last month. Switch to our Premium Account to save $150.”

- Result: Service costs turn into sales opportunities.

Frequently Asked Questions

Can AI integrate with legacy banking systems?

Yes. We know banks run on COBOL and mainframes. Modern AI platforms use “Wrapper APIs” to talk safely to legacy cores (like Fiserv, Jack Henry, or custom mainframes) without needing a system rewrite.

What is “Explainable AI” (XAI)?

It’s a regulatory requirement. You cannot deny a loan because “the black box said so.” XAI provides the specific “feature importance” (e.g., “Debt-to-Income ratio was too high”) that drove the decision, ensuring you can explain it to regulators and customers.
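For a simple linear scoring model, feature importance for a single applicant can be read directly from each feature’s contribution to the score. The sketch below is a hedged illustration: the weights, the standardized feature values, and the reason texts are all invented, and more complex models typically need dedicated attribution methods instead.

```python
# Hypothetical linear credit model over standardized features.
# A negative contribution pushes the score toward denial.
WEIGHTS = {
    "debt_to_income": -1.8,
    "months_on_file": 0.6,
    "recent_delinquencies": -1.2,
}
REASON_TEXT = {
    "debt_to_income": "Debt-to-Income ratio was too high",
    "months_on_file": "Credit history is too short",
    "recent_delinquencies": "Recent delinquencies on file",
}


def reason_codes(applicant, top_n=2):
    """Rank features by how much each one lowered this applicant's score."""
    contributions = {name: WEIGHTS[name] * value for name, value in applicant.items()}
    negatives = sorted((c, name) for name, c in contributions.items() if c < 0)
    return [REASON_TEXT[name] for _c, name in negatives[:top_n]]


applicant = {"debt_to_income": 1.5, "months_on_file": -0.5, "recent_delinquencies": 0.2}
print(reason_codes(applicant))
```

The output lists the factors that hurt this applicant most, most damaging first, which is the raw material for the explanation given to the customer.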

Is On-Premises deployment still possible?

Absolutely. For many G-SIBs (Global Systemically Important Banks), data cannot leave the building. We support full air-gapped containerized deployments.

How does this impact my workforce?

It elevates them. Bank tellers and support staff move from being “data entry clerks” to “financial advisors.” The AI handles the paperwork; humans handle the relationships.

Can we use Cloud for sensitive data?

Yes, with caveats. We use Bring Your Own Key (BYOK) encryption.

- The cloud provider (AWS/Azure) has the encrypted blob.

- You (The Bank) hold the encryption key on a physical HSM (Hardware Security Module) in your basement.

- Even if the cloud provider is subpoenaed, they can only hand over gibberish.

What is the difference between “Predictive” and “Generative” AI in finance?

- Predictive AI: “Will this customer default on a loan?” (Probability Score 0-100). Used for Risk, Fraud, Trading.

- Generative AI: “Write a personalized email explaining why the loan was denied.” Used for Service, Marketing, Coding.

Do we need a Chief AI Officer (CAIO)?

For banks >$10B in assets, yes. You need a single executive responsible for the “AI Governance Committee,” the body that decides if a model is safe to launch. For smaller institutions, this role sits under the CTO or CRO (Chief Risk Officer).

4. The “AI Squad”: Who You Need to Hire

You don’t just need “coders.” You need a cross-functional squad.

1. The Machine Learning Engineer (MLOps)

- Role: Keeps the lights on. Ensures the model runs 24/7 with <50ms latency.

- Skill: Python, Kubernetes, Docker.

2. The AI Ethicist / Governance Lead

- Role: The “Brakes.” Checks for bias (Race/Gender) and ensures regulatory compliance.

- Skill: Law, Sociology, Risk Management.

3. The Data Steward

- Role: The “Librarian.” Ensures data quality. “Garbage in, Garbage out.”

- Skill: SQL, Data Lineage tools.

4. The Domain Expert (The Banker)

- Role: The “Teacher.” Tells the AI what “Fraud” actually looks like.

- Skill: 20 years of experience in underwriting or compliance.

5. Integration Deep Dive: Taming the Mainframe

The Elephant in the room: Your Core Banking System (likely running on COBOL from 1980). How do we connect modern AI to a Mainframe without breaking it?

Strategy A: The “Sidecar” Database (Safest)

Don’t hit the mainframe for every prediction.

- Replicate: Every night, ETL (Extract, Transform, Load) the day’s transactions into a modern Cloud Data Lake (Snowflake/Databricks).

- Predict: The AI runs batch predictions on the Data Lake.

- Push: Results (e.g., “Customer Risk Score”) are pushed back to the Mainframe as a simple static field.

Strategy B: The API Wrapper (Real-Time)

Use an “Anti-Corruption Layer.”

- Middleware: Install a lightweight API Gateway (MuleSoft/Kong) in front of the Mainframe.

- Translate: The Gateway accepts a modern JSON request from the AI, translates it into an ISO 8583 message, hits the mainframe, and translates the response back.

- Cache: Use Redis to cache frequent requests so you don’t melt the mainframe CPU (which costs $$ per cycle).
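The caching step can be illustrated with a small time-to-live cache. This sketch uses an in-process dictionary as a stand-in for Redis so it runs without extra dependencies; the `TTLCache` class and `fetch_profile_from_mainframe` function are hypothetical, and the latter is only a placeholder for the gateway’s translated mainframe call.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for Redis: values expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.store = {}

    def get_or_load(self, key, loader):
        """Return a fresh cached value, or call loader(key) and cache the result."""
        hit = self.store.get(key)
        now = self.clock()
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        value = loader(key)
        self.store[key] = (now, value)
        return value


mainframe_calls = []


def fetch_profile_from_mainframe(account_id):
    """Hypothetical placeholder for the expensive mainframe round trip."""
    mainframe_calls.append(account_id)
    return {"account_id": account_id, "segment": "retail"}


cache = TTLCache(ttl_seconds=30)
for _ in range(3):
    cache.get_or_load("acct-42", fetch_profile_from_mainframe)
print(len(mainframe_calls))
```

Three lookups inside the 30-second window reach the mainframe only once. Caching suits slow-changing reference data; values such as live balances generally need a much shorter TTL or no cache at all.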

Glossary of Financial AI Terms

Speak the language of the future.

- Model Drift: When a model’s accuracy degrades because the real world (e.g., inflation) no longer matches the training data. Requires “Retraining.”

- Feature Engineering: The art of creating data inputs. Finding that “Time since last transaction” is a better predictor of fraud than “Transaction Amount.”

- Reason Codes: Regulatory codes (e.g., “01 - Insufficient Income”) that explain why a credit decision was made. AI must output these.

- Synthetic Data: Fake customer data generated by AI that mathematically resembles real data. Used for testing without risking privacy breaches.

- Human-in-the-Loop (HITL): A workflow where the AI makes a recommendation, but a human must click “Approve” for high-stakes decisions.

Summary

In summary, financial services AI implementation in 2025 is defined by “Compliance by Design” and rapid time-to-value. By choosing modular platforms and focusing on ROI-positive use cases like fraud detection, financial institutions can modernize their operations without the multi-year timelines and massive budgets of the past.


Secure your future: Contact AgenixHub for a confidential discussion on secure Financial AI deployment.

Don’t wait for your competitors to lead. Deploy production-ready Financial AI in weeks with AgenixHub.