ISO 26262 & Automotive AI: Complete Compliance Guide

Complete guide to ISO 26262 compliance for automotive AI: ASIL levels, UNECE WP.29 R155/R156 (type approval, with EU fines of up to €30K per non-compliant vehicle), ISO/SAE 21434 cybersecurity, SOTIF, and data privacy (GDPR/CCPA), plus on-premises deployment strategies.

What is ISO 26262?

ISO 26262 is the international functional safety standard for electrical and electronic (E/E) systems in road vehicles. It provides a risk-based framework that classifies hazards into Automotive Safety Integrity Levels (ASILs), defines requirements for the entire safety lifecycle from concept and development through production, operation, and decommissioning, and establishes verification and validation procedures so that safety-critical systems operate reliably and mitigate hazards throughout the vehicle’s operational life.

Quick Answer

ISO 26262 compliance for automotive AI requires:

- ASIL Classification — Safety goals for AI functions are assigned ASILs (A-D) based on risk; ASIL D typically applies to safety-critical functions like braking/steering.

- Explainability — AI decisions must be traceable and auditable for functional safety validation.

- UNECE WP.29 R155/R156 — Mandatory CSMS (Cybersecurity Management System) and SUMS (Software Update Management System); non-compliance means type approval denial in markets applying the regulations, and EU type-approval rules allow fines of up to €30,000 per non-compliant vehicle.

- ISO/SAE 21434 — Lifecycle cybersecurity engineering with TARA (Threat Analysis and Risk Assessment).

- SOTIF (ISO 21448) — Addressing functional insufficiencies and ODD (Operational Design Domain) limitations.

- Data Privacy — GDPR (up to €20M or 4% of worldwide annual turnover) and CCPA (up to $7,500 per intentional violation) compliance for connected vehicle data.

- On-Premises Deployment — Satisfies data residency, audit control, and air-gapped requirements for maximum compliance assurance.

Automotive AI compliance is complex but achievable with a structured implementation guide.

Quick Facts

- Standard: ISO 26262 (Functional Safety)

- Highest Safety Level: ASIL D (random hardware failure target <10^-8 per hour)

- Mandatory Compliance: July 2024 for all new vehicles in the EU (UNECE WP.29 R155/R156; new vehicle types since July 2022)

- Penalties: Up to €30,000 per non-compliant vehicle (EU type-approval rules)

- Key Related Standards: ISO/SAE 21434 (Cybersecurity), SOTIF (ISO 21448)

Key Questions

What is the most critical ASIL level for automotive AI?

ASIL D is the most stringent level. It is typically assigned to hazards involving safety-actuating functions such as steering and braking, and it requires the most rigorous validation and fail-safe mechanisms.

How does ISO 26262 address the “black box” nature of AI?

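ISO 26262 does not address machine learning specifically, but it requires traceability from safety requirements through design to verification evidence. Explainable AI (XAI) techniques, decision logging, and the AI-specific guidance in ISO/PAS 8800 help produce that evidence, so that safety-critical decisions can be audited and validated.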

What are the consequences of ISO 26262 non-compliance?

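Non-compliance can lead to type approval denial, mandatory recalls, and severe financial penalties, including fines of up to €30,000 per non-compliant vehicle under EU type-approval rules that incorporate UNECE R155/R156. ISO 26262 itself is a voluntary standard, but it is widely treated as the state of the art, so deviating from it increases product-liability exposure.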

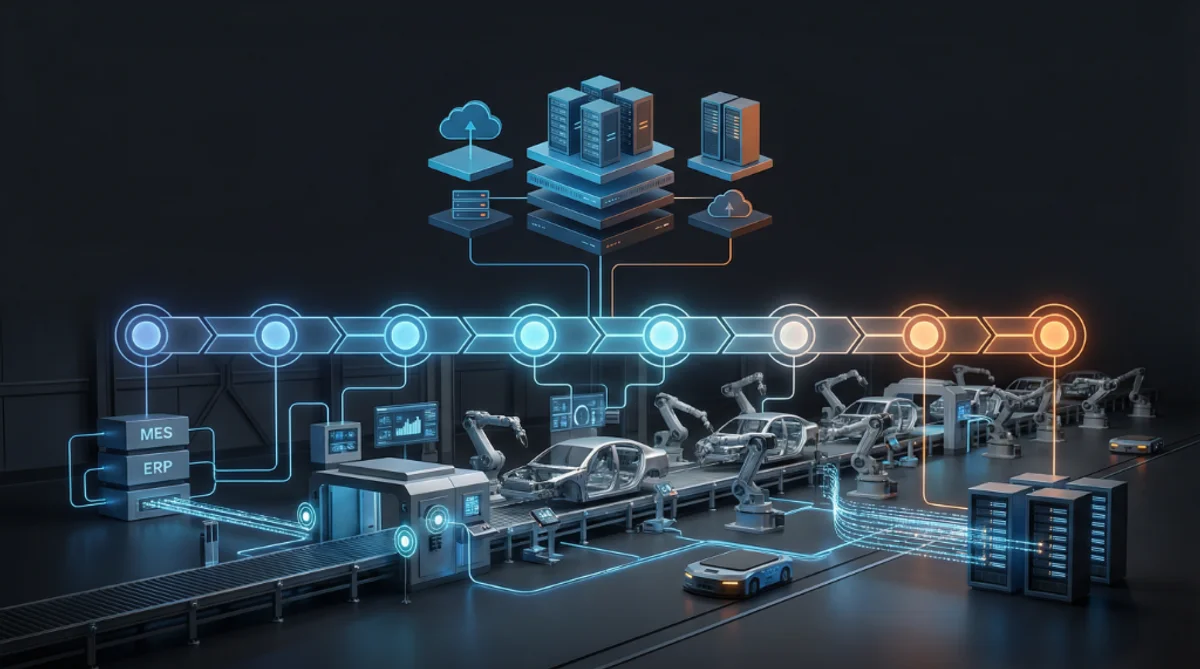

ISO 26262 Overview: Functional Safety for Automotive AI

ISO 26262 is the international standard for functional safety of electrical and electronic systems in road vehicles. Originally designed for traditional automotive systems, it now applies to AI/ML systems used in ADAS, autonomous driving, and safety-critical functions.

Core Principles:

- ASIL Levels (A-D): Automotive Safety Integrity Levels based on severity, exposure, and controllability

- HARA: Hazard Analysis and Risk Assessment to identify potential failures

- Safety Lifecycle: Systematic development process from concept through decommissioning

- Verification & Validation: Rigorous testing to prove safety compliance

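ASIL Level Requirements:

| ASIL | Typical severity (simplified) | Example systems | Random hardware failure target (PMHF) |
|---|---|---|---|
| QM | No injury | Infotainment, comfort features | None |
| A | Light injuries | Warning lights, non-critical sensors | None specified by ISO 26262 |
| B | Moderate injuries | Airbag deployment timing | <10^-7 per hour |
| C | Severe injuries | ABS, ESC, lane keeping | <10^-7 per hour |
| D | Life-threatening | Braking, steering, autonomous driving | <10^-8 per hour |

In practice an ASIL is assigned to each hazardous event from severity, exposure, and controllability combined, so the severity and example columns are simplifications. The failure-rate targets are metrics for random hardware failures (PMHF); they do not apply to software or ML model errors, which are systematic faults.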
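To make the severity/exposure/controllability logic concrete, here is a minimal Python sketch of ASIL determination. It relies on a well-known shortcut: with classes S1-S3, E1-E4, and C1-C3, the sum S + E + C reproduces the ASIL determination table in ISO 26262-3 (10 gives D, 9 gives C, 8 gives B, 7 gives A, anything lower gives QM). The function name and example classifications are hypothetical; always confirm a result against the standard's table and your own HARA.

```python
def determine_asil(s: int, e: int, c: int) -> str:
    """Return 'QM', 'A', 'B', 'C' or 'D' for severity (S0-S3), exposure (E0-E4), controllability (C0-C3)."""
    if not (0 <= s <= 3 and 0 <= e <= 4 and 0 <= c <= 3):
        raise ValueError("expected S0-S3, E0-E4, C0-C3")
    # A class of 0 in any dimension means no ASIL is assigned.
    if 0 in (s, e, c):
        return "QM"
    # Additive shortcut equivalent to the ISO 26262-3 ASIL determination table.
    return {10: "D", 9: "C", 8: "B", 7: "A"}.get(s + e + c, "QM")


if __name__ == "__main__":
    # Hypothetical classifications for illustration only.
    print(determine_asil(3, 4, 3))  # S3/E4/C3 -> D
    print(determine_asil(3, 2, 3))  # S3/E2/C3 -> B
    print(determine_asil(1, 3, 2))  # S1/E3/C2 -> QM
```

The same severity can yield very different ASILs: a life-threatening hazard in a rare driving situation (low exposure) may only reach ASIL A or B.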

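AI Challenge: Traditional ISO 26262 practice assumes systems whose behavior can be fully specified and verified against requirements. AI/ML models learn their behavior from data, so their outputs on inputs not covered during training are hard to specify and predict, which makes compliance more complex. ISO/PAS 8800 provides supplementary guidance for AI safety.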

AI/ML Integration Challenges with ISO 26262

Automotive AI presents unique compliance challenges that traditional verification methods don’t address:

1. Non-Deterministic Behavior

Traditional software: Same input → Same output (deterministic)

AI/ML systems: Similar inputs → Potentially very different outputs (learned, statistical behavior); some inference stacks also add small run-to-run numerical differences

Compliance Approach:

- Define acceptable performance ranges (e.g., 99%+ accuracy)

- Implement confidence thresholds for AI decisions

- Use ensemble methods for critical functions

- Maintain fallback systems for low-confidence scenarios
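The confidence-threshold, ensemble, and fallback measures above can be combined in a single decision gate. The following Python sketch is illustrative only: the `Model` interface, the unanimity rule, the 0.9 threshold, and the fallback label are assumptions, not values from any standard.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List, Sequence, Tuple

# Hypothetical model interface: input features -> (label, confidence in [0, 1]).
Model = Callable[[Sequence[float]], Tuple[str, float]]


@dataclass
class Decision:
    label: str
    confidence: float
    used_fallback: bool


def ensemble_decide(models: List[Model], x: Sequence[float],
                    fallback_label: str, min_confidence: float = 0.9) -> Decision:
    """Accept the ensemble output only if all members agree and mean confidence meets the threshold."""
    predictions = [model(x) for model in models]
    labels = {label for label, _ in predictions}
    avg_confidence = mean(conf for _, conf in predictions)
    if len(labels) == 1 and avg_confidence >= min_confidence:
        return Decision(labels.pop(), avg_confidence, used_fallback=False)
    # Disagreement or low confidence: hand control to the fallback path.
    return Decision(fallback_label, avg_confidence, used_fallback=True)


if __name__ == "__main__":
    agreeing = [lambda x: ("pedestrian", 0.97), lambda x: ("pedestrian", 0.93)]
    split = [lambda x: ("pedestrian", 0.97), lambda x: ("cyclist", 0.96)]
    print(ensemble_decide(agreeing, [0.4, 1.2], "request_driver_takeover").used_fallback)  # False
    print(ensemble_decide(split, [0.4, 1.2], "request_driver_takeover").used_fallback)     # True
```

Requiring unanimity is a conservative choice; majority voting raises availability but lets a single wrong-but-confident model contribute to the outcome.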

2. Training Data Quality

AI safety depends on training data quality, diversity, and representativeness. Biased or incomplete data leads to unsafe AI behavior.

Compliance Requirements:

- Document data sources, collection methods, and quality metrics

- Demonstrate data coverage of ODD (Operational Design Domain)

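- Validate data labeling accuracy against targets derived from the safety requirements (e.g., 95%+ for ASIL D functions; no standard prescribes a single figure)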

- Implement continuous data quality monitoring
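One way to demonstrate ODD coverage is to bin training samples by ODD attributes and flag under-represented combinations. This Python sketch assumes each sample carries ODD tags; the dimensions in `ODD_DIMENSIONS` and the `min_per_cell` threshold are hypothetical placeholders for values a project would derive from its own ODD specification.

```python
from collections import Counter
from itertools import product
from typing import Dict, List, Tuple

# Hypothetical ODD dimensions; a real project derives these from its ODD specification.
ODD_DIMENSIONS: Dict[str, List[str]] = {
    "road": ["highway", "urban", "rural"],
    "lighting": ["day", "night"],
    "weather": ["dry", "rain"],
}


def coverage_gaps(samples: List[Dict[str, str]], min_per_cell: int) -> List[Tuple[str, ...]]:
    """Return every ODD cell (combination of dimension values) with fewer than min_per_cell samples."""
    keys = list(ODD_DIMENSIONS)
    counts = Counter(tuple(sample[k] for k in keys) for sample in samples)
    all_cells = product(*(ODD_DIMENSIONS[k] for k in keys))
    return [cell for cell in all_cells if counts[cell] < min_per_cell]


if __name__ == "__main__":
    data = [{"road": "highway", "lighting": "day", "weather": "dry"}] * 500
    data += [{"road": "highway", "lighting": "night", "weather": "rain"}] * 20
    for cell in coverage_gaps(data, min_per_cell=100):
        print("under-represented:", cell)
```

The full cross-product grows quickly as dimensions are added, so larger ODDs usually rely on combinatorial (e.g., pairwise) coverage or risk-prioritized scenarios instead.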

3. Model Explainability

ISO 26262 requires traceability from safety requirements through design to verification evidence. “Black box” neural networks make it hard to produce that evidence for individual decisions.

Compliance Solutions:

- Use explainable AI (XAI) techniques: LIME, SHAP, attention visualization

- Implement decision logging for all safety-critical AI outputs

- Maintain human-readable audit trails

- Use simpler, interpretable models where possible (decision trees, rule-based systems)
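Decision logging becomes audit-grade when the log is tamper-evident. The Python sketch below chains entries with SHA-256 so that editing or removing an earlier entry is detectable. The field names are hypothetical, and a production system would also need signed checkpoints or write-once storage, because a hash chain alone cannot reveal truncation of the most recent entries.

```python
import hashlib
import json
import time
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class DecisionLog:
    """Append-only log; each entry stores the hash of the previous one (a hash chain)."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, model_version: str, inputs_digest: str, output: str, confidence: float) -> Dict[str, Any]:
        record = {
            "ts": time.time(),
            "model_version": model_version,
            "inputs_digest": inputs_digest,  # hash of the sensor inputs, not the raw data
            "output": output,
            "confidence": confidence,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        record["hash"] = _digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute the chain; any edited or removed earlier entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

Logging a digest of the inputs rather than raw sensor data keeps the audit trail compact and limits personal data in the log, while still letting investigators match a decision to archived inputs.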

4. Runtime Monitoring

AI models can degrade over time due to distribution shift, adversarial inputs, or edge cases not seen during training.

Compliance Requirements:

- Real-time performance monitoring (accuracy, latency, confidence)

- Anomaly detection for out-of-distribution inputs

- Automatic fallback to safe state when degradation detected

- Over-the-air (OTA) model updates with SUMS compliance
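As a minimal example of out-of-distribution detection feeding a safe-state fallback, the Python sketch below flags inputs whose features fall far outside the training statistics. The per-feature z-score test, the `z_max = 4.0` threshold, and the `SAFE_STATE` action name are assumptions; per-feature checks miss many real distribution shifts, so production monitors typically use richer detectors.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Callable, List, Sequence


@dataclass
class FeatureStats:
    mu: List[float]
    sigma: List[float]


def fit_stats(train: List[List[float]]) -> FeatureStats:
    """Per-feature mean and population standard deviation from training data."""
    columns = list(zip(*train))
    # Guard against zero variance so the z-score below never divides by zero.
    return FeatureStats([mean(c) for c in columns], [pstdev(c) or 1e-9 for c in columns])


def is_out_of_distribution(x: Sequence[float], stats: FeatureStats, z_max: float = 4.0) -> bool:
    """Flag inputs where any feature is more than z_max standard deviations from the training mean."""
    return any(abs(v - m) / s > z_max for v, m, s in zip(x, stats.mu, stats.sigma))


def monitor_step(x: Sequence[float], stats: FeatureStats,
                 predict: Callable[[Sequence[float]], str]) -> str:
    """Route OOD inputs to a safe-state action instead of trusting the model."""
    if is_out_of_distribution(x, stats):
        return "SAFE_STATE"  # hypothetical action name
    return predict(x)
```

The monitor runs before the model output is used, so an OOD input never reaches the actuator path without passing through the fallback logic.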

UNECE WP.29 R155/R156: Mandatory Cybersecurity & Software Updates

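UNECE WP.29 regulations R155 (Cybersecurity) and R156 (Software Updates) are required for type approval in UNECE 1958 Agreement contracting parties that apply them, including the EU, Japan, South Korea, and Australia. In the EU they became mandatory for new vehicle types in July 2022 and for all newly registered vehicles in July 2024.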

R155: Cybersecurity Management System (CSMS)

Requirements:

- Risk Assessment: Identify and mitigate cybersecurity threats (Annex 5 threat catalog)

- Security by Design: Integrate security throughout vehicle lifecycle

- Incident Response: Detect, respond to, and report security incidents

- Supply Chain Security: Manage cybersecurity risks from suppliers

Penalties for Non-Compliance:

- Fines of up to €30,000 per non-compliant vehicle (EU type-approval rules)

- Type approval denial (cannot sell vehicles)

- Mandatory recalls for security vulnerabilities

- Reputational damage and liability exposure

AI-Specific CSMS Requirements:

- Protect AI models from adversarial attacks (model poisoning, evasion)

- Secure training data pipelines and storage

- Implement model integrity verification

- Monitor for AI-specific threats (data poisoning, backdoors)
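Model integrity verification can start with checking a model artifact against a tag recorded at release. This Python sketch uses HMAC-SHA256 from the standard library for brevity, assuming a shared secret key; real vehicle deployments more commonly use asymmetric signatures with keys held in a hardware security module, and the function names here are hypothetical.

```python
import hashlib
import hmac


def sha256_file(path: str, chunk_size: int = 1 << 20) -> bytes:
    """Stream the model artifact from disk and return its SHA-256 digest."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            h.update(chunk)
    return h.digest()


def tag_model(path: str, key: bytes) -> str:
    """Release step: HMAC-SHA256 over the artifact's digest, stored in the release manifest."""
    return hmac.new(key, sha256_file(path), hashlib.sha256).hexdigest()


def verify_model(path: str, key: bytes, expected_tag: str) -> bool:
    """Load-time step: recompute the tag; compare_digest avoids timing side channels."""
    return hmac.compare_digest(tag_model(path, key), expected_tag)
```

If `verify_model` returns False, the runtime should refuse to load the model and report a security event rather than fall back silently to the unverified file.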

R156: Software Update Management System (SUMS)

Requirements:

- Secure OTA Updates: Encrypted, authenticated software delivery

- Version Control: Track all software versions across fleet

- Rollback Capability: Revert to previous version if update fails

- User Notification: Inform drivers of critical updates

AI Model Updates:

- OTA model updates must comply with SUMS

- Validate model performance before fleet-wide deployment

- Implement staged rollouts (pilot → gradual → full fleet)

- Maintain audit trails of all model versions and updates
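The staged rollout, validation, and audit-trail requirements above can be sketched as a small state machine. The stage names and fleet shares in `STAGES`, the `Rollout` class, and the pass/fail gate are hypothetical; how a gate is evaluated (field metrics, validation results) is left to the caller.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical rollout plan: (stage name, share of the fleet on the new model).
STAGES: List[Tuple[str, float]] = [("pilot", 0.01), ("gradual", 0.10), ("full", 1.0)]


@dataclass
class Rollout:
    new_version: str
    previous_version: str
    stage: int = 0
    active: bool = True
    history: List[str] = field(default_factory=list)  # audit trail of rollout events

    def report(self, gate_passed: bool) -> str:
        """Record the current stage's validation gate, then promote, complete, or roll back."""
        if not self.active:
            raise RuntimeError("rollout already finished")
        name, share = STAGES[self.stage]
        if not gate_passed:
            self.active = False
            self.history.append(f"{name}: gate failed, rolled back to {self.previous_version}")
            return "rolled_back"
        self.history.append(f"{name}: {share:.0%} of fleet on {self.new_version}, gate passed")
        if self.stage == len(STAGES) - 1:
            self.active = False
            return "completed"
        self.stage += 1
        return "promoted"


if __name__ == "__main__":
    r = Rollout("perception-2.1", "perception-2.0")
    print(r.report(True))   # promoted (pilot -> gradual)
    print(r.report(False))  # rolled_back
    print(r.history)
```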

Compliance Timeline (EU):

- July 2022: Mandatory for new vehicle types

- July 2024: Mandatory for all newly registered vehicles

- Ongoing: Continuous compliance monitoring required

ISO/SAE 21434: Cybersecurity Engineering Lifecycle

ISO/SAE 21434 defines cybersecurity engineering for road vehicles, complementing UNECE WP.29 R155 with detailed technical requirements.

Key Processes:

1. Threat Analysis and Risk Assessment (TARA)

Systematic identification of cybersecurity threats and vulnerabilities:

TARA Steps:

- Asset Identification: Identify valuable assets (data, functions, components)

- Threat Scenario Analysis: Define attack vectors and threat actors

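- Impact Rating: Assess potential damage from successful attacks

- Attack Path Analysis: Identify the sequences of steps an attacker could take to realize each threat scenario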

- Attack Feasibility: Evaluate attacker skill, resources, time required

- Risk Determination: Calculate cybersecurity risk level

- Risk Treatment: Define mitigation strategies
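To illustrate the feasibility, risk determination, and treatment steps, the Python sketch below uses the attack-potential approach, where ratings for elapsed time, expertise, knowledge of the item, window of opportunity, and equipment are summed. The feasibility bands follow the informative attack-potential example associated with ISO/SAE 21434 (verify them against the standard before use); the risk matrix and the treatment threshold are hypothetical, since each organization defines its own.

```python
from typing import Dict, List

FEASIBILITY: List[str] = ["very_low", "low", "medium", "high"]


def feasibility_from_attack_potential(total: int) -> str:
    """Map a summed attack-potential score to a feasibility rating (lower score = easier attack)."""
    if total < 0:
        raise ValueError("attack potential cannot be negative")
    if total <= 13:
        return "high"
    if total <= 19:
        return "medium"
    if total <= 24:
        return "low"
    return "very_low"


# Hypothetical risk matrix: impact rating -> risk values (1-5) per feasibility rating,
# ordered very_low, low, medium, high. Each organization defines its own matrix.
RISK_MATRIX: Dict[str, List[int]] = {
    "negligible": [1, 1, 1, 1],
    "moderate": [1, 2, 2, 3],
    "major": [1, 2, 3, 4],
    "severe": [2, 3, 4, 5],
}


def risk_value(impact: str, feasibility: str) -> int:
    return RISK_MATRIX[impact][FEASIBILITY.index(feasibility)]


def treatment(risk: int, reduce_at: int = 3) -> str:
    """Hypothetical policy: risks at or above reduce_at must be reduced; lower risks are retained."""
    return "reduce" if risk >= reduce_at else "retain"


if __name__ == "__main__":
    # Hypothetical threat: poisoning the training-data pipeline, attack potential 15, severe impact.
    feasibility = feasibility_from_attack_potential(15)
    risk = risk_value("severe", feasibility)
    print(feasibility, risk, treatment(risk))  # medium 4 reduce
```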

AI-Specific Threats:

- Model extraction attacks (stealing proprietary AI models)

- Adversarial examples (fooling AI with crafted inputs)

- Data poisoning (corrupting training data)

- Model inversion (extracting training data from models)

- Backdoor attacks (hidden malicious behavior)

2. Cybersecurity Requirements

Define security controls based on TARA results:

- Confidentiality: Protect sensitive data and AI models

- Integrity: Prevent unauthorized modification of AI models/data

- Availability: Ensure AI systems remain operational

- Authentication: Verify identity of users and systems

- Authorization: Control access to AI functions and data

3. Secure Development

Implement security throughout AI development lifecycle:

- Secure coding practices for AI/ML pipelines

- Vulnerability scanning and penetration testing

- Security code reviews and static analysis

- Dependency management (open-source libraries)

- Secure model training environments

4. Validation and Verification

Prove cybersecurity effectiveness:

- Penetration testing against TARA threat scenarios

- Fuzzing AI inputs to find vulnerabilities

- Red team exercises simulating real attacks

- Security regression testing after updates
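A simple form of input fuzzing for an AI component is to apply random bounded perturbations and record any that change the output. The Python sketch below assumes a `classify` function over numeric feature vectors. Random search only finds easy failures, so it complements, rather than replaces, gradient-based adversarial testing and formal robustness analysis.

```python
import random
from typing import Callable, List, Sequence


def perturbation_fuzz(classify: Callable[[Sequence[float]], str], x: Sequence[float],
                      epsilon: float, trials: int = 1000, seed: int = 0) -> List[List[float]]:
    """Return perturbed copies of x (each feature shifted by at most epsilon) that change the label."""
    rng = random.Random(seed)  # fixed seed keeps the test reproducible
    baseline = classify(x)
    failures = []
    for _ in range(trials):
        candidate = [v + rng.uniform(-epsilon, epsilon) for v in x]
        if classify(candidate) != baseline:
            failures.append(candidate)
    return failures


if __name__ == "__main__":
    # Toy stand-in for a perception component: "obstacle" above a score threshold.
    def toy_classifier(features: Sequence[float]) -> str:
        return "obstacle" if features[0] > 0.5 else "clear"

    print(len(perturbation_fuzz(toy_classifier, [0.9], epsilon=0.1)))       # 0
    print(len(perturbation_fuzz(toy_classifier, [0.52], epsilon=0.1)) > 0)  # True
```

An empty result shows only that random search found nothing; it is not evidence of robustness.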

SOTIF (ISO 21448): Safety of the Intended Functionality

SOTIF addresses safety risks from functional insufficiencies and reasonably foreseeable misuse—critical for AI systems that may encounter unexpected scenarios.

SOTIF Scope:

- Functional insufficiencies (system limitations)

- Reasonably foreseeable misuse

- Performance limitations in edge cases

- Interaction with ODD (Operational Design Domain)

Key Concepts:

1. Operational Design Domain (ODD)

Define conditions under which AI system operates safely:

ODD Parameters:

- Geographic area (highways, urban, rural)

- Environmental conditions (weather, lighting, road surface)

- Traffic conditions (density, speed limits)

- Infrastructure (lane markings, signage quality)

Example ODD: “Highway autopilot operates safely on divided highways with clear lane markings, in daylight or well-lit conditions, with traffic speeds 40-80 mph, in dry weather.”
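An ODD is easier to test and monitor once it is machine-checkable. The Python sketch below encodes the example highway-autopilot ODD. The `Conditions` fields and string values are assumptions, and a real system would derive these inputs from perception and map data, each with its own uncertainty.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Conditions:
    road_type: str            # e.g. "divided_highway", "urban"
    lane_markings_clear: bool
    well_lit: bool            # daylight or adequate street lighting
    traffic_speed_mph: float
    weather: str              # e.g. "dry", "rain", "snow"


def odd_violations(c: Conditions) -> List[str]:
    """Check conditions against the example highway-autopilot ODD; an empty list means inside the ODD."""
    problems = []
    if c.road_type != "divided_highway":
        problems.append("road type outside ODD")
    if not c.lane_markings_clear:
        problems.append("lane markings not clear")
    if not c.well_lit:
        problems.append("insufficient lighting")
    if not 40 <= c.traffic_speed_mph <= 80:
        problems.append("traffic speed outside 40-80 mph")
    if c.weather != "dry":
        problems.append("weather not dry")
    return problems


if __name__ == "__main__":
    now = Conditions("divided_highway", False, True, 65.0, "rain")
    violations = odd_violations(now)
    if violations:
        # Leaving the ODD: alert the driver and start graceful degradation.
        print("ODD exit:", violations)
```

Returning every violated condition, not just the first, gives the driver alert and the event log a complete reason for the ODD exit.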

2. Known Unsafe Scenarios

Identify scenarios where AI may fail:

- Heavy rain degrading camera perception

- Sun glare causing sensor blindness

- Faded lane markings confusing lane-keeping

- Unusual objects not in training data

Mitigation:

- Detect ODD violations and alert driver

- Graceful degradation to safe state

- Expand ODD through additional training

- Implement redundant sensing modalities

3. Unknown Unsafe Scenarios

Discover edge cases through:

- Extensive real-world testing (billions of miles)

- Simulation-based scenario generation

- Field data analysis from production fleet

- Continuous learning and model updates

SOTIF Validation:

- Demonstrate absence of unreasonable risk

- Prove ODD coverage through testing

- Show effective detection of ODD violations

- Document known limitations clearly

Data Privacy Compliance: GDPR & CCPA

Connected vehicles generate massive amounts of personal data, triggering strict privacy regulations.

GDPR (General Data Protection Regulation)

Scope: EU residents’ data, regardless of where processing occurs

Key Requirements:

- Lawful Basis: Consent, contract, legitimate interest, legal obligation

- Data Minimization: Collect only necessary data

- Purpose Limitation: Use data only for stated purposes

- Storage Limitation: Delete data when no longer needed

- Data Subject Rights: Access, rectification, erasure, portability

Penalties:

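- Up to €20 million OR 4% of worldwide annual turnover (whichever is higher) for the most serious infringements; a lower tier of €10 million or 2% applies to others

- When linked infringements stem from the same processing operations, the total fine cannot exceed the cap for the gravest infringement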
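The GDPR cap is simple arithmetic, shown here as a Python sketch. Article 83(5) sets the upper tier at €20 million or 4% of worldwide annual turnover, and Article 83(4) sets a lower tier at €10 million or 2%. The result is a ceiling, not a predicted fine, since authorities weigh the factors listed in Article 83(2); the function name is hypothetical.

```python
def gdpr_fine_cap(worldwide_turnover_eur: int, upper_tier: bool = True) -> int:
    """Maximum administrative fine for an undertaking under GDPR Art. 83(4)/(5).

    Upper tier (Art. 83(5)): the higher of EUR 20M or 4% of prior-year worldwide turnover.
    Lower tier (Art. 83(4)): the higher of EUR 10M or 2%.
    """
    fixed_cap, percent = (20_000_000, 4) if upper_tier else (10_000_000, 2)
    # Integer arithmetic avoids floating-point rounding on large amounts.
    return max(fixed_cap, worldwide_turnover_eur * percent // 100)


if __name__ == "__main__":
    print(gdpr_fine_cap(100_000_000))    # 20000000: the fixed cap dominates
    print(gdpr_fine_cap(2_000_000_000))  # 80000000: 4% of EUR 2B
```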

Connected Vehicle Data:

- Location data: Highly sensitive; collecting it from the vehicle generally requires consent (EDPB connected-vehicle guidance applies the ePrivacy rules)

- Driving behavior: Can infer personal characteristics

- Biometric data (driver monitoring): Special category data when used to uniquely identify the driver; strict rules apply

- Telematics: Requires clear privacy notice
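Data minimization for connected vehicle records can be sketched as keeping only the fields a stated purpose needs, coarsening location, and replacing the VIN with a keyed pseudonym. The field names and the fleet-analytics purpose are hypothetical. Pseudonymized data remains personal data under GDPR, because the key holder can re-link it.

```python
import hashlib
import hmac
from typing import Any, Dict, Tuple


def pseudonymize_vin(vin: str, key: bytes) -> str:
    """Stable keyed pseudonym (truncated HMAC-SHA256); the key holder can still re-link records."""
    return hmac.new(key, vin.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def coarsen_location(lat: float, lon: float, decimals: int = 2) -> Tuple[float, float]:
    """Round coordinates; two decimal places is roughly 1.1 km of latitude."""
    return round(lat, decimals), round(lon, decimals)


def minimize_record(record: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    """Keep only the fields a hypothetical fleet-analytics purpose needs."""
    lat, lon = coarsen_location(record["lat"], record["lon"])
    return {
        "vehicle": pseudonymize_vin(record["vin"], key),
        "lat": lat,
        "lon": lon,
        "speed_kph": record["speed_kph"],
    }
```

Because the output is built from an explicit allowlist, any new field added upstream (such as a driver name) is dropped by default instead of leaking into analytics.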

CCPA (California Consumer Privacy Act)

Scope: California residents’ data

Key Requirements:

- Disclosure: Inform consumers what data is collected

- Opt-Out: Right to opt out of the sale or sharing of personal data

- Deletion: Right to delete personal data

- Non-Discrimination: Cannot penalize opt-out

Penalties (base statutory amounts, periodically adjusted for inflation):

- $2,500 per unintentional violation

- $7,500 per intentional violation

- Private right of action for data breaches ($100-$750 per consumer)

Privacy-Preserving AI

Techniques:

- Federated Learning: Train models without centralizing data

- Differential Privacy: Add noise to protect individual privacy

- On-Device Processing: Keep sensitive data on vehicle

- Data Anonymization: Remove or irreversibly transform identifying information (merely pseudonymized data is still personal data under GDPR)

- Homomorphic Encryption: Compute on encrypted data
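Differential privacy can be illustrated with the Laplace mechanism for a counting query, such as how many vehicles triggered emergency braking in a region. This Python sketch assumes a sensitivity of 1 (one vehicle changes the count by at most one) and uses only the standard library; `dp_count` is a hypothetical helper. Python's `random` module and naive floating-point sampling are not suitable for production use, and real deployments must also track the cumulative privacy budget across queries.

```python
import random


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query with sensitivity 1: add Laplace(0, 1/epsilon) noise."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    # The difference of two independent exponentials with mean `scale` is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


if __name__ == "__main__":
    rng = random.Random(7)
    # Smaller epsilon = more noise = stronger privacy for each individual vehicle.
    print(dp_count(120, epsilon=1.0, rng=rng))
    print(dp_count(120, epsilon=0.1, rng=rng))
```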

On-Premises Deployment for Compliance

On-premises AI deployment provides maximum control for compliance:

Compliance Benefits:

1. Data Sovereignty

- Keep proprietary vehicle data within secure perimeter

- Simplify compliance with GDPR restrictions on transfers outside the EEA (GDPR has no general data-residency mandate)

- Prevent third-party data exposure

- Maintain audit control over all data access

2. CSMS/SUMS Compliance

- Full control over AI model updates (R156 SUMS)

- Comprehensive audit trails for security events (R155 CSMS)

- Air-gapped option for maximum security

- No dependency on cloud vendor security

3. ISO 26262 Traceability

- Complete visibility into AI processing

- Fixed, fully controlled infrastructure configuration (vs. shared cloud environments)

- Reproducible testing environments

- Full audit trail of AI decisions

4. Faster Incident Response

- Immediate access to logs and data

- No waiting for cloud vendor cooperation

- Direct control over remediation

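- Faster breach detection (target <24 hours, versus the 277-day average time to identify and contain a breach reported in IBM’s 2022 Cost of a Data Breach study)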

AgenixHub On-Premises:

- ISO 26262-compliant AI processing

- UNECE WP.29 R155/R156 support

- GDPR/CCPA data controls

- Air-gapped deployment option

- Comprehensive audit trails

- 6-12 week implementation

Frequently Asked Questions

What is ISO 26262 for automotive AI?

ISO 26262 is the functional safety standard for automotive electrical/electronic systems, including AI/ML. It requires:

- ASIL Classification — AI systems assigned ASIL levels (A-D) based on risk; ASIL D for safety-critical functions (braking, steering, autonomous driving).

- Hazard Analysis — Systematic HARA process to identify potential failures and mitigation strategies.

- Safety Lifecycle — Rigorous development process with verification and validation at each phase.

- Traceability — Complete documentation of safety decisions and AI behavior.

- ISO/PAS 8800 — Separate, supplementary guidance specifically for AI/ML safety.

AI compliance challenges include non-deterministic behavior, training data quality, model explainability, and runtime monitoring.

What are ASIL levels and how do they apply to AI?

ASIL (Automotive Safety Integrity Levels) range from A (lowest) to D (highest) based on severity, exposure, and controllability:

- QM (Quality Management) — Not an ASIL; no ISO 26262 safety requirements apply (infotainment).

- ASIL A — Light injuries (warning lights).

- ASIL B — Moderate injuries (airbag deployment timing).

- ASIL C — Severe injuries (ABS, lane keeping).

- ASIL D — Life-threatening (braking, steering, autonomous driving).

AI ASIL Requirements: For ASIL D functions, the hardware must meet a random hardware failure target below 10^-8 per hour, while the AI itself needs project-defined performance targets (e.g., 99%+ accuracy), explainable decisions, comprehensive testing (billions of scenarios), and runtime monitoring with safe fallback. Higher ASIL levels require more rigorous validation, redundancy, and fail-safe mechanisms.

What are the penalties for non-compliance?

Automotive AI non-compliance penalties are severe:

- UNECE WP.29 R155/R156 — Type approval denial in markets applying the regulations (vehicles cannot be sold there), mandatory recalls, and fines of up to €30,000 per non-compliant vehicle under EU type-approval rules.

- GDPR — Up to €20M or 4% of worldwide annual turnover (whichever is higher) for the most serious infringements.

- CCPA — $7,500 per intentional violation, $2,500 unintentional, private right of action for breaches.

- ISO 26262 — Not legally binding itself, but deviations increase product liability exposure and can contribute to mandatory recalls, reputational damage, and potential criminal charges for gross negligence.

- EU CO₂ 2025 targets — An estimated €15B in potential industry fines, calculated at €95 per g/km of exceedance for each newly registered vehicle.

Real Examples: VW Dieselgate cost more than $30B in fines and settlements, and Tesla Autopilot has faced multiple NHTSA investigations and recalls. Non-compliance risks can be existential for automotive companies.

How does UNECE WP.29 affect automotive AI?

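UNECE WP.29 R155/R156 are required for type approval in markets that apply them (in the EU, for new vehicle types since July 2022 and for all newly registered vehicles since July 2024):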

- R155 (Cybersecurity) — Requires CSMS (Cybersecurity Management System), risk assessment (Annex 5 threats), incident response, supply chain security, and AI-specific protections against adversarial attacks, model poisoning, data breaches.

- R156 (Software Updates) — Requires SUMS (Software Update Management System), secure OTA updates, version control, rollback capability, and applies to AI model updates.

AI Implications: All OTA AI model updates must comply with R156 SUMS. AI models must be protected from cybersecurity threats per R155 CSMS. Penalties: type approval denial, plus fines of up to €30K per non-compliant vehicle under EU type-approval rules.

What is SOTIF and why does it matter?

SOTIF (Safety of the Intended Functionality, ISO 21448) addresses safety risks from functional insufficiencies and reasonably foreseeable misuse—critical for AI:

- ODD (Operational Design Domain) — Defines conditions where AI operates safely (geography, weather, traffic, infrastructure).

- Known Unsafe Scenarios — Identified limitations (heavy rain, sun glare, faded markings).

- Unknown Unsafe Scenarios — Edge cases discovered through testing and field data.

- Graceful Degradation — Safe fallback when ODD violated or uncertainty detected.

Why It Matters: ISO 26262 addresses hazards caused by malfunctions (faults in hardware or software); SOTIF addresses hazards that arise even when the system works as specified, because of performance limitations or an insufficient specification. AI systems have performance boundaries that must be identified and managed. Tesla Autopilot incidents are often cited as SOTIF-type issues (ODD violations, edge cases).

How does on-premises deployment help compliance?

On-premises AI deployment provides maximum compliance control:

- Data Sovereignty — Keeps proprietary vehicle data within a secure perimeter, simplifies compliance with GDPR restrictions on international transfers, and prevents third-party exposure.

- CSMS/SUMS — Full control over AI model updates (R156), comprehensive audit trails (R155), air-gapped option.

- ISO 26262 — Complete traceability of AI decisions, deterministic infrastructure, reproducible testing.

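- Faster Incident Response — Immediate log access, direct remediation control, and a <24 hour breach detection target, versus the 277-day average time to identify and contain a breach in IBM’s 2022 Cost of a Data Breach study (an average across all environments, not a cloud-specific figure).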

- Cost Savings — No cloud data egress fees ($0.05-$0.12/GB), no vendor lock-in, predictable infrastructure costs.

AgenixHub: On-premises deployment with ISO 26262, UNECE WP.29, GDPR/CCPA compliance. 6-12 week implementation, 65% lower cost than Bosch/Siemens.

Ready to Achieve Automotive AI Compliance?

AgenixHub enables ISO 26262-compliant automotive AI with on-premises deployment, UNECE WP.29 R155/R156 support, and comprehensive compliance monitoring. Deploy in 6-12 weeks with 65% lower cost than traditional vendors.

Compliance Benefits:

- ISO 26262-compliant AI processing

- UNECE WP.29 R155/R156 support

- GDPR/CCPA data controls

- On-Premises deployment option


Summary

In summary, ISO 26262 is the baseline expectation for any AI system performing safety-critical functions in modern vehicles: the standard is voluntary, but it is widely treated as the industry state of the art. By integrating functional safety with cybersecurity (ISO/SAE 21434) and the safety of the intended functionality (SOTIF, ISO 21448), manufacturers can make their AI solutions safe, secure, and ready for global markets.

Recommended Follow-up:

- Automotive AI Implementation Guide

- ROI of AI in Automotive Case Studies

- UNECE WP.29 Automotive AI Regulations

Achieve automotive AI compliance: Schedule a free consultation to discuss ISO 26262, UNECE WP.29, and data privacy compliance for your AI systems.

Don’t risk €30K/vehicle penalties or type approval denial. Deploy compliant automotive AI with AgenixHub today.