Custom AI systems deployed within cloud, VPC, on-premises, or hybrid environments, where data and model ownership remain under organizational control.

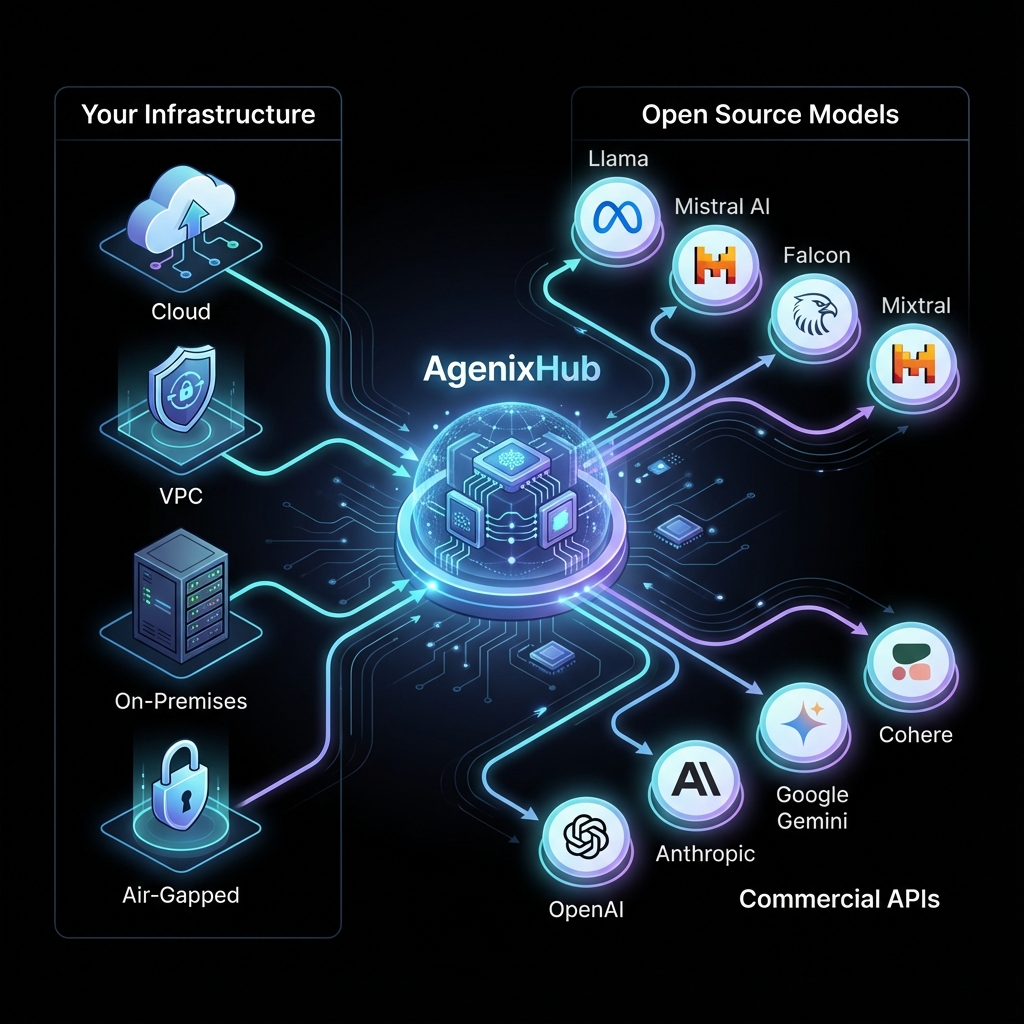

AgenixHub deploys AI systems that integrate internal infrastructure, open-source models, and optional commercial APIs while maintaining centralized governance, security controls, and compliance alignment.

AgenixHub is a platform for deploying AI systems within organizational infrastructure boundaries. It provides integration frameworks, deployment tooling, and operational guidance for organizations implementing private AI, on-premise AI, or sovereign AI architectures.

The platform supports deployment across cloud (VPC), on-premises, hybrid, and air-gapped environments, enabling organizations to implement AI capabilities while maintaining control over data, models, and operations.

On-premise AI refers to artificial intelligence systems deployed and operated entirely within an organization's own physical infrastructure, such as private data centers or dedicated servers. In this model, training data, model parameters, and inference outputs remain under the organization's direct control and are not processed by external cloud-based AI providers.

Unlike cloud-based AI services, on-premise AI operates on hardware owned, maintained, and physically controlled by the organization itself. This deployment model provides the highest level of control over AI infrastructure, data handling, and operational security, making it suitable for air-gapped environments, national security applications, and critical infrastructure protection.

Sovereign AI refers to artificial intelligence systems that are developed, deployed, and governed under the legal and regulatory authority of a specific country or jurisdiction. This ensures that data, models, and AI operations remain subject only to local laws and regulatory oversight.

Sovereign AI addresses concerns about foreign surveillance, extraterritorial data access, and compliance with national regulatory frameworks. It is particularly important for government agencies, defense contractors, critical infrastructure operators, and organizations in jurisdictions with strict data localization laws.

AgenixHub is designed for organizations that meet one or more of the following conditions:

Representative use cases include:

AgenixHub AI systems are built on architectural principles that prioritize organizational control, regulatory compliance, and long-term system ownership. These principles guide deployment architecture, data handling, and operational governance.

AI systems are deployed within the organization's designated infrastructure boundaries, whether a cloud tenancy, a VPC, an on-premises data center, or an air-gapped environment. This ensures that data processing, model training, and inference operations remain under direct organizational control.
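Boundary enforcement normally lives in the network layer (firewall rules, security groups, private endpoints), but applications can add a defensive check of their own. The following minimal Python sketch assumes the organization's boundary can be described as a list of private IPv4 ranges. Under that assumption, the hypothetical function `endpoint_within_boundary` refuses any inference endpoint whose address falls outside those ranges. The ranges shown are the RFC 1918 private blocks and stand in for whatever a real deployment designates.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical boundary definition: the RFC 1918 private IPv4 ranges.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def endpoint_within_boundary(url: str) -> bool:
    """Return True only if the URL's host is an IP literal inside an allowed network.

    DNS names are rejected (fail closed) because checking them would require
    resolving the name and testing every returned address.
    """
    host = urlparse(url).hostname
    if host is None:
        return False
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal, e.g. "model.internal": treat as outside the boundary.
        return False
    # An IPv6 address is never "in" an IPv4 network, so it also returns False here.
    return any(addr in net for net in ALLOWED_NETWORKS)


print(endpoint_within_boundary("http://10.20.30.40:8000/v1/chat/completions"))  # True
print(endpoint_within_boundary("https://203.0.113.5/v1/chat/completions"))      # False
```

Rejecting DNS names outright keeps the sketch simple. A fuller check would resolve the name and test every returned address, because a hostname can point anywhere.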

System architecture is designed to support compliance with jurisdiction-specific regulations, including data residency requirements, auditability standards, and explainability frameworks. Deployment patterns accommodate GDPR, HIPAA, financial services regulations, and sovereign AI mandates.

Organizations retain ownership of model weights, training data, fine-tuning parameters, and inference logs. This contrasts with SaaS AI platforms where models and data are managed by external vendors, creating potential vendor lock-in and data exposure risks.

AI systems are designed to be auditable and explainable, with logging, monitoring, and governance controls that enable organizations to understand model behavior, track data lineage, and demonstrate compliance to regulators or auditors.
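Hash chaining is one widely used way to make audit logs tamper-evident. Each record stores the hash of the record before it. The following Python sketch illustrates that technique under simple assumptions: storage is in memory and all fields are JSON-serializable. The `AuditLog` class and its field names are hypothetical and do not describe AgenixHub's logging format.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass
class AuditLog:
    """Append-only, hash-chained log of AI operations (illustrative sketch).

    Each entry stores the SHA-256 hash of the previous entry, so editing an
    earlier record invalidates its own hash and breaks the chain after it.
    """

    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, actor: str, action: str, detail: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"actor": actor, "action": action, "detail": detail, "prev": prev_hash}
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("analyst@example.org", "inference", {"model": "internal-llm", "doc_id": "D-17"})
log.append("admin@example.org", "model_update", {"model": "internal-llm", "version": "2"})
print(log.verify())  # True

log.entries[0]["detail"]["doc_id"] = "D-99"  # simulate tampering with an earlier record
print(log.verify())  # False
```

Hash chaining only detects modification of existing records. Deleting the newest records still leaves a valid chain, which is why the latest hash is often copied to a separate system.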

Systems support multiple deployment patterns including fully on-premise installations, hybrid architectures combining internal and external resources, and cloud-native deployments within customer-controlled tenancies. This flexibility accommodates varying security, performance, and operational requirements.
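In a hybrid architecture, a central policy decides which requests may reach external resources. The following sketch assumes a hypothetical four-level data classification scheme and uses illustrative target names. It routes each request to the most convenient target that is cleared for the request's sensitivity, so restricted data stays on on-premises infrastructure.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical data classification levels, ordered from least to most sensitive."""

    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical targets and the highest sensitivity each one is cleared to receive.
CLEARANCE = {
    "external_commercial_api": Sensitivity.PUBLIC,
    "vpc_hosted_model": Sensitivity.CONFIDENTIAL,
    "on_prem_model": Sensitivity.RESTRICTED,
}

# Preference order: most convenient first, falling back to more controlled infrastructure.
PREFERENCE = ["external_commercial_api", "vpc_hosted_model", "on_prem_model"]


def choose_target(level: Sensitivity) -> str:
    """Return the first preferred target whose clearance covers this sensitivity level."""
    for target in PREFERENCE:
        if level <= CLEARANCE[target]:
            return target
    raise ValueError(f"No target is cleared for {level.name} data")


print(choose_target(Sensitivity.PUBLIC))        # external_commercial_api
print(choose_target(Sensitivity.CONFIDENTIAL))  # vpc_hosted_model
print(choose_target(Sensitivity.RESTRICTED))    # on_prem_model
```

Ordering targets from most to least convenient means the policy selects an external API only when the data classification permits it. Otherwise, it falls back to infrastructure the organization controls.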

Common deployment patterns for private AI systems, illustrating how organizations implement AI capabilities while maintaining control over data, models, and operational infrastructure.

Large language models deployed within organizational infrastructure to process sensitive documents, support compliance-driven research, and enable knowledge retrieval without external data transmission.

Learn more about Internal LLMs for Regulated Knowledge Workflows

Natural language processing and machine learning systems that analyze confidential documents, contracts, and operational records while maintaining data sovereignty and audit trails.

Learn more about AI-Assisted Document Analysis on Proprietary Data

Machine learning models trained on sensitive operational, financial, or industrial data to generate forecasts and insights without exposing proprietary information to external AI providers.

Learn more about Predictive Analytics on Confidential Operational Data

AgenixHub's approach is not designed for consumer-scale applications or low-cost AI experimentation. It is intended for organizations that prioritize control, compliance, and long-term ownership of AI systems.

Private AI refers to artificial intelligence systems that are deployed and operated within an organization's own infrastructure or dedicated cloud tenancy, rather than using shared, multi-tenant cloud AI services. In private AI deployments, data processing, model training, and inference operations occur within infrastructure boundaries controlled by the organization.

This approach ensures that sensitive data, proprietary models, and AI-generated outputs remain under direct organizational control and are not processed by external AI service providers. Private AI systems can be deployed on-premises, in virtual private clouds (VPCs), or in dedicated cloud environments where compute resources are not shared with other tenants.

Private AI is typically used when data sovereignty, regulatory compliance, or security policies prevent the use of public cloud AI services. Learn more about private AI systems.

Cloud-based AI services (such as OpenAI's API, Google Cloud AI, or Azure AI) operate on shared infrastructure managed by third-party vendors. When using these services, data is transmitted to external servers, processed by models hosted on vendor infrastructure, and returned to the user. The vendor controls the infrastructure, model weights, and operational environment.

Private AI systems, in contrast, are deployed within the customer's own infrastructure boundaries. Data does not leave the organization's control, models are hosted on customer-managed infrastructure, and the organization retains full ownership of training data, model parameters, and inference outputs.

The primary difference is control: cloud AI prioritizes convenience and scalability, while private AI prioritizes data sovereignty, regulatory compliance, and operational control. Organizations often choose private AI when legal, contractual, or security requirements prohibit external data processing.

On-premise AI refers to artificial intelligence systems deployed entirely within an organization's physical infrastructure, such as private data centers or dedicated server rooms. Unlike cloud-based AI or even private cloud deployments, on-premise AI operates on hardware owned and maintained by the organization itself.

On-premise deployments are used when organizations require complete physical control over AI infrastructure, often due to air-gap requirements, national security mandates, or regulations prohibiting any external network connectivity for sensitive workloads. This approach provides maximum control but requires the organization to manage hardware, networking, cooling, power, and system maintenance.

On-premise AI is a subset of private AI, representing the most restrictive deployment model. Learn more about on-premise AI systems.
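Air-gapped sites cannot pull models from the internet, so model weights have to be brought in through a controlled transfer process. A common integrity control is to record SHA-256 digests before transfer and check them again inside the isolated environment. The following Python sketch assumes a simple, hypothetical JSON manifest format. The function names are illustrative.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large weight files are never fully loaded into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(model_dir: Path, manifest: Path) -> None:
    """Record a digest for every file under model_dir (run before the transfer)."""
    entries = {
        str(p.relative_to(model_dir)): sha256_file(p)
        for p in sorted(model_dir.rglob("*"))
        if p.is_file()
    }
    manifest.write_text(json.dumps(entries, indent=2, sort_keys=True))


def verify_manifest(model_dir: Path, manifest: Path) -> list:
    """Return relative paths that are missing or whose digest differs (run after the transfer)."""
    expected = json.loads(manifest.read_text())
    problems = []
    for rel, digest in expected.items():
        p = model_dir / rel
        if not p.is_file() or sha256_file(p) != digest:
            problems.append(rel)
    return problems


# Usage: build a manifest, then detect a modified file.
with tempfile.TemporaryDirectory() as tmp:
    model_dir = Path(tmp) / "model"
    model_dir.mkdir()
    (model_dir / "weights.bin").write_bytes(b"\x00" * 1024)
    manifest = Path(tmp) / "manifest.json"  # kept outside model_dir so it is not hashed itself
    write_manifest(model_dir, manifest)
    print(verify_manifest(model_dir, manifest))  # []
    (model_dir / "weights.bin").write_bytes(b"\x01" * 1024)  # simulate corruption in transit
    print(verify_manifest(model_dir, manifest))  # ['weights.bin']
```

A digest check detects accidental corruption or substitution after the manifest was written. It does not prove where the weights originally came from. That question is usually addressed separately, for example by verifying a publisher's signature before the manifest is created.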

Sovereign AI refers to artificial intelligence systems that are developed, deployed, and governed under the legal and regulatory authority of a specific country or jurisdiction. Sovereign AI ensures that all AI operations—including data storage, model training, and inference—remain subject only to the laws and regulations of the designated sovereign territory.

This concept is particularly important for government agencies, defense contractors, critical infrastructure operators, and organizations in jurisdictions with strict data localization laws. Sovereign AI addresses concerns about foreign surveillance, extraterritorial data access, and compliance with national regulatory frameworks.

Sovereign AI systems are designed to prevent data and models from being subject to foreign legal jurisdiction or accessed by entities outside the sovereign boundary. Learn more about sovereign AI systems.
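One simple automated control for sovereignty requirements checks every storage and compute location in a deployment against the jurisdictions the organization has approved. The following sketch uses a hypothetical region-to-jurisdiction mapping and an illustrative resource list. Actual region identifiers and the jurisdictions that govern them depend on the infrastructure provider.

```python
from dataclasses import dataclass

# Hypothetical mapping from region identifiers to the jurisdiction that governs them.
REGION_JURISDICTION = {
    "de-fra-1": "DE",
    "fr-par-1": "FR",
    "us-east-1": "US",
    "onprem-berlin": "DE",
}


@dataclass(frozen=True)
class Resource:
    name: str
    kind: str    # e.g. "object_store", "gpu_cluster", "vector_db"
    region: str


def residency_violations(resources, allowed_jurisdictions):
    """Return names of resources located outside the allowed jurisdictions.

    Regions missing from the mapping count as violations, so an unknown
    location fails closed instead of silently passing.
    """
    violations = []
    for r in resources:
        jurisdiction = REGION_JURISDICTION.get(r.region)
        if jurisdiction not in allowed_jurisdictions:
            violations.append(r.name)
    return violations


deployment = [
    Resource("training-data", "object_store", "de-fra-1"),
    Resource("inference-gpus", "gpu_cluster", "onprem-berlin"),
    Resource("backup", "object_store", "us-east-1"),
]
print(residency_violations(deployment, {"DE"}))  # ['backup']
```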

Cloud-based AI services are not appropriate when organizations face regulatory, contractual, or security constraints that prohibit external data processing. Common scenarios include:

In these scenarios, organizations typically deploy private AI, on-premise AI, or sovereign AI systems instead of using public cloud AI services.

In private AI deployments, the organization retains full ownership of training data, model weights, fine-tuning parameters, inference logs, and all AI-generated outputs. This contrasts with cloud AI services, where vendors may retain rights to use data for model improvement, analytics, or other purposes as defined in their terms of service.

Ownership includes both legal rights and operational control. The organization controls where data is stored, how models are trained, who has access to inference outputs, and how long data is retained. This level of control is essential for compliance with data protection regulations, intellectual property protection, and long-term strategic independence from AI vendors.

Private AI systems are designed to ensure that organizations maintain complete ownership and control over their AI capabilities, preventing vendor lock-in and ensuring compliance with data governance policies.
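Retention ("how long data is retained") is one aspect of ownership that an organization can enforce directly when it operates the system itself. The following minimal sketch assumes inference logs are stored as records with a timezone-aware UTC timestamp. Under that assumption, a scheduled job can separate records older than a policy-defined window. The 90-day window and the record fields are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention policy for inference logs.
RETENTION = timedelta(days=90)


def apply_retention(records, now=None):
    """Split records into (kept, expired) according to the retention window.

    Each record is a dict with a timezone-aware 'created_at' datetime.
    Records exactly at the cutoff are kept.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - RETENTION
    kept = [r for r in records if r["created_at"] >= cutoff]
    expired = [r for r in records if r["created_at"] < cutoff]
    return kept, expired


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
logs = [
    {"id": 1, "created_at": now - timedelta(days=10)},
    {"id": 2, "created_at": now - timedelta(days=120)},
]
kept, expired = apply_retention(logs, now=now)
print([r["id"] for r in kept], [r["id"] for r in expired])  # [1] [2]
```

Returning the expired records, rather than deleting them inside the function, lets the caller log the purge in the audit trail before removing the data from storage.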

Private large language models (LLMs) are typically deployed using one of several architectural patterns, depending on organizational requirements and infrastructure capabilities:

Deployment typically involves selecting an appropriate open-source or open-weight model (such as Llama, Mistral, or Falcon), fine-tuning it on organizational data, and integrating it with internal systems through APIs or direct application integration.
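Some model-serving frameworks, such as vLLM, can expose an OpenAI-compatible HTTP API. This lets internal applications call a privately hosted model in the same request format they would use for a commercial API, while traffic stays on internal networks. The following sketch only builds the request with Python's standard library and does not send it. The hostname `llm.internal.example`, the port, and the model name `internal-llm` are placeholders. Check your serving stack's documentation to confirm whether it exposes `/v1/chat/completions`.

```python
import json
import urllib.request

# Placeholder address of a model server inside the organization's network.
BASE_URL = "http://llm.internal.example:8000"


def build_chat_request(model: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) a chat request in the OpenAI-compatible format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("internal-llm", "Summarize contract C-42.")
print(req.get_method(), req.full_url)  # POST http://llm.internal.example:8000/v1/chat/completions
# Sending would be: urllib.request.urlopen(req, timeout=30)
```

Keeping the request format compatible means an application can switch between an internal model and an approved external one by changing only the base URL, which fits the hybrid patterns described earlier.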

Private AI systems support regulatory compliance by ensuring that data processing, model training, and AI operations occur within controlled environments that can be audited, monitored, and governed according to regulatory requirements. Key compliance capabilities include:

Private AI architectures are designed to meet compliance requirements for HIPAA (healthcare), GDPR (data protection), SOC 2 (security), financial services regulations, and government security standards.

On-premise AI provides maximum control and security but requires organizations to accept several operational trade-offs compared to cloud-based AI services:

These trade-offs are acceptable when control, compliance, and data sovereignty requirements outweigh the convenience and scalability benefits of cloud AI services. Organizations typically choose on-premise AI when regulatory or security requirements mandate it, not for cost or operational efficiency alone.

Yes, private AI systems are designed to integrate with existing enterprise infrastructure, including databases, authentication systems, business applications, and data warehouses. Integration is typically achieved through:

Private AI systems are built to operate within existing enterprise architectures, ensuring that AI capabilities can be deployed without requiring wholesale replacement of existing systems or infrastructure.
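Integration with existing authentication systems often comes down to mapping groups that the organization already manages in its directory to permissions on AI capabilities. These groups might, for example, arrive as a group claim in an SSO token. The following sketch assumes hypothetical group and permission names and a deny-by-default policy. It is not an AgenixHub API.

```python
# Hypothetical mapping from directory groups to AI permissions.
GROUP_PERMISSIONS = {
    "legal-analysts": {"query:contracts_llm"},
    "finance-team": {"query:forecasting_model", "read:forecast_outputs"},
    "ai-platform-admins": {"query:contracts_llm", "query:forecasting_model", "admin:models"},
}


def permissions_for(groups):
    """Union of permissions granted by all of a user's groups; unknown groups grant nothing."""
    granted = set()
    for group in groups:
        granted |= GROUP_PERMISSIONS.get(group, set())
    return granted


def is_allowed(groups, permission):
    """Deny by default: allow only if at least one group explicitly grants the permission."""
    return permission in permissions_for(groups)


user_groups = ["legal-analysts", "all-staff"]  # e.g. taken from an SSO token's group claim
print(is_allowed(user_groups, "query:contracts_llm"))    # True
print(is_allowed(user_groups, "read:forecast_outputs"))  # False
```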

Have more questions about private AI systems?

Schedule a Consultation